
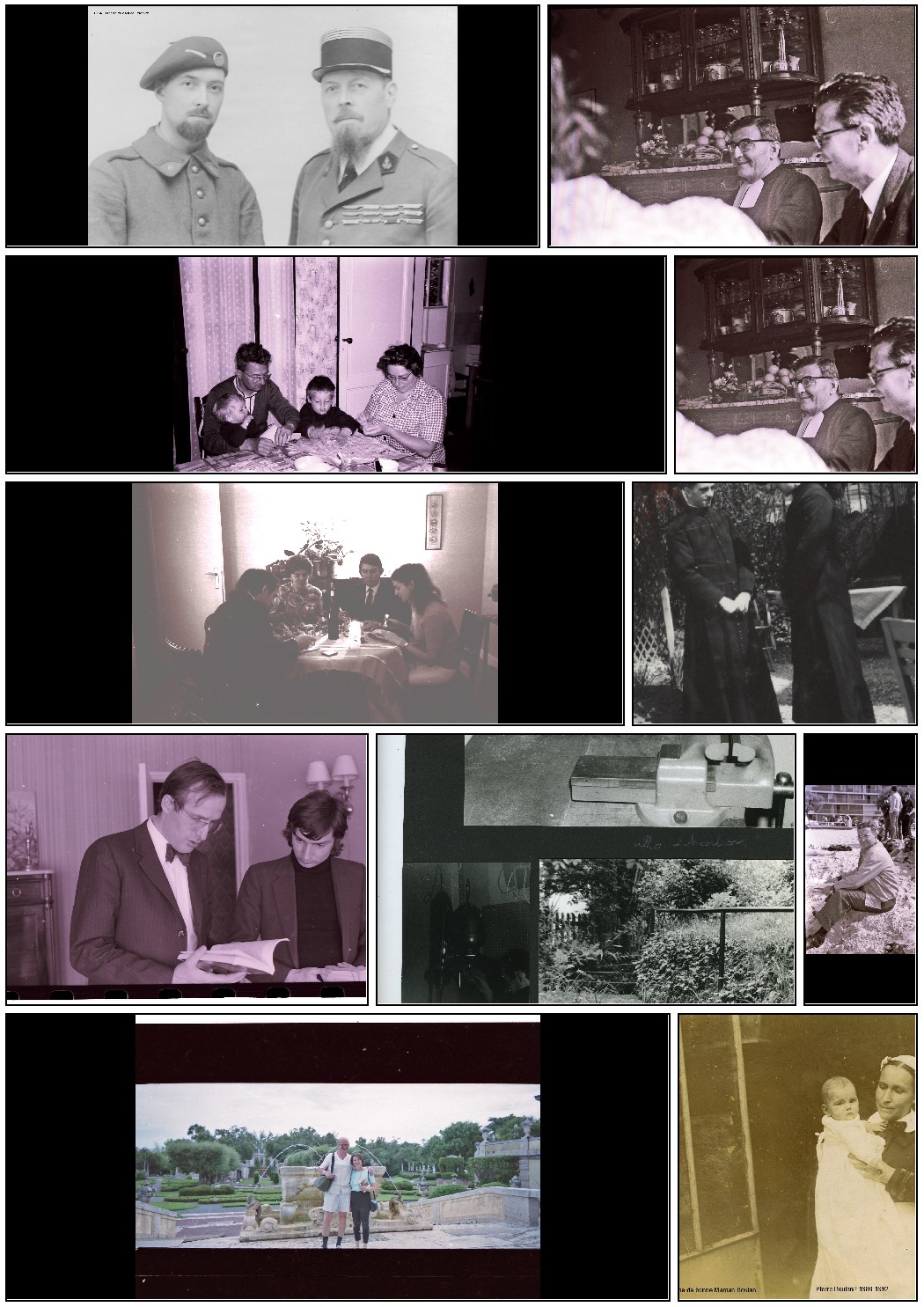

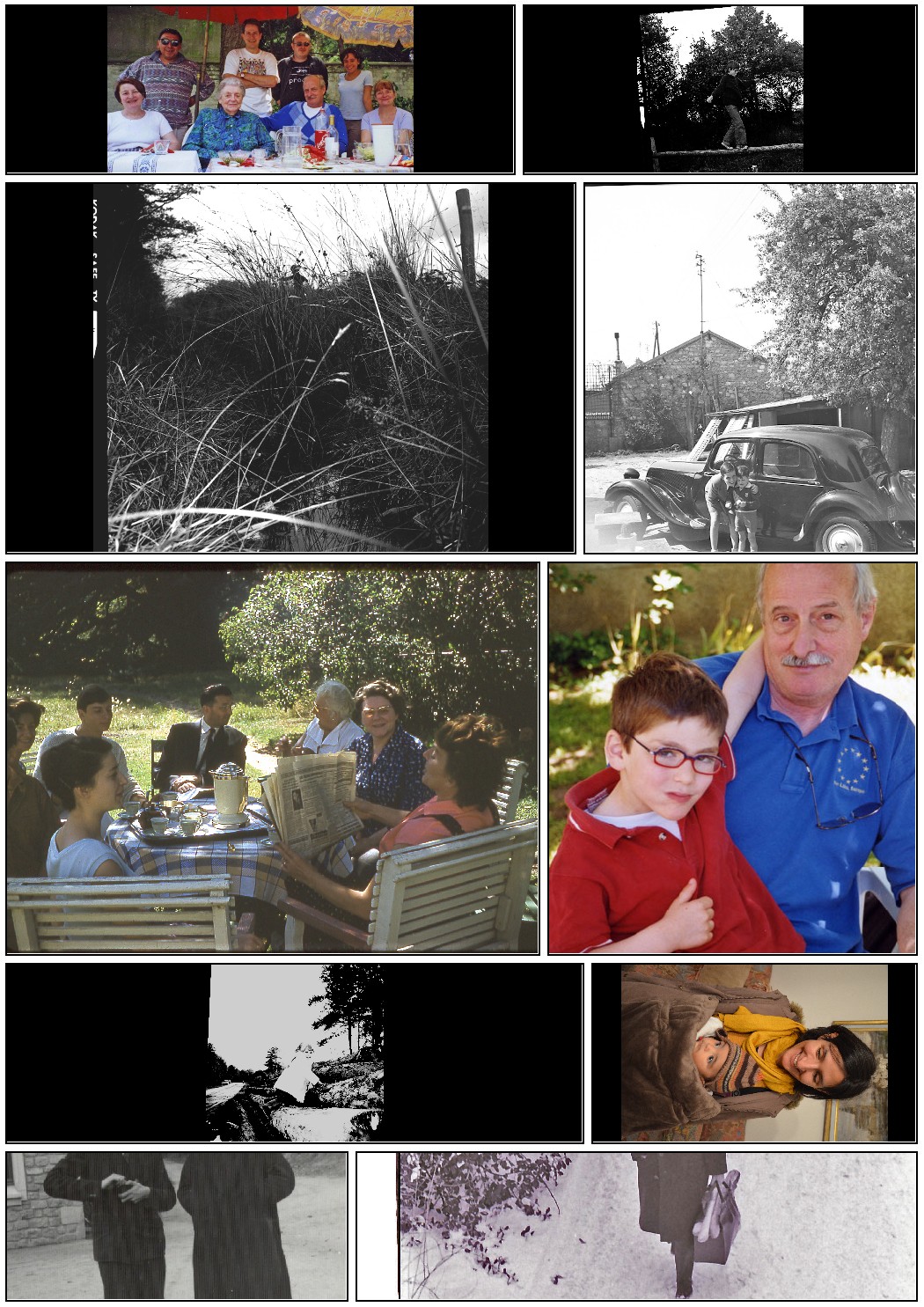

Example: an answer to the demand comic ~ Pierre

Replacing "random()" by identity is the heart of the Roxame.2 project, launched in summer 2015 after 15 years of "digital art" experiments (Roxame.1), projects (Les Algoristes) and a tentatively exhaustive review (Diccan).

It aims to widen the Roxame project from a generative painting software into a "general artist", going past the limits of "standard" algorithmic art to explore the new spaces of computing and their three facets: big data, deep learning and cloud. On the way, two other topics emerged: an operational model of the concept of art, and the end of random().

After one year of development, let's review the state of the project and look at the way ahead, technically and philosophically.

Roxame was started in 2001 and aimed to build a "painting system" on the model of an "expert system", that is, a system creating original paintings autonomously. It had two criteria for success: producing images that even the programmer didn't expect, and images pleasant enough to be presented in art shows. These two aims were reached around 2004. Nevertheless, the project showed its limits and opened so many new development paths that a collective endeavour seemed more appropriate than a solo venture.

The association Les Algoristes was created in 2005 to develop such a cooperation. But it failed to reach this aim, since it could not find a project leader combining programming professionalism, artistic temperament, leadership capacity and... leisure time.

Then the Diccan.com website and dictionary was launched in 2010. A new wind was blowing in French digital art, with many artists, the engagement of a new gallery (Charlot) and new presentation spaces like the Gaîté Lyrique. The field did not seem to lack authors or marketing structures. Instead, we felt a need for specialized criticism. Five years later, Diccan (Digital creation critic and analysis) has covered the field quite exhaustively, at the worldwide level, with more than 2 000 artists listed and a fairly complete presentation of the concepts.

But, after some brilliant years of novelties, digital art seemed disappointing :

- Artists no longer bring really new concepts or practices. They discard, in particular, the formerly hyped possibilities of interaction. In spite of some cooperative efforts (mainly between "painters" and musicians), the professional artist model sticks to the traditional one-man solitary creation with a DIY digital environment. (With one exception, Florent Aziosmanoff, who combines creation with theoretical elaboration [1][2] and keeps developing today with his Joconde project.)

- Buyers show fatigue, as seen in galleries, shows and auctions. Galleries (Denise René, Charlot, Lelia Mordoch) took care to remain open to other forms of art.

- The concept of digital art is dissolving. (That was confirmed in spring 2016 by shows in the Centquatre, La Villette, The Cube and Enghien's CDA, which mixed just about anything more or less "technological" under the blanket of "art numérique".) Even highly technical arts like film animation don't really play with the concept and keep using the computer as a mere tool (see for instance the Siggraph publications).

As a result, the work of criticism and analysis published in Diccan has now become quite boring. And, at a time when the digital (computing, algorithmic) field enters a new era (see [3][4] among many), it was tempting to set modesty aside and embark on some, perhaps desperate, exploration of the new spaces: Roxame.2. Having tested with Les Algoristes my narrow limits as a project leader, I started it alone.

New concepts and new environments lead to quite different views of software development, and of digital/generative art in particular. Big data, deep learning and the cloud take us away from the now traditional algorithmic approach. They put a deeper and deeper layer between what the programmer can think and intend and what really happens and develops in the systems. That's the price to pay for efficiency, as shown for instance by the successive game successes of Deep Blue at chess (1997), still essentially algorithmic, Watson at Jeopardy (2011), mainly big data, and AlphaGo (2015), mainly deep learning.

Big data, as the name implies, strikes by its mere mass. Useful inspiration can be found in Marz & Warren [5], with surprising assertions such as "Facts are atomic and timestamped" (an approach also called "event sourcing"). Data atoms become eternal. But they also become invisible to the naked eye. Hundreds or thousands of data lines or images can be keyed in, checked and reviewed "by hand". That becomes impossible with millions (and, for an individual, with some tens of thousands). Big data therefore requires tools for these operations, whether found off the shelf or specifically developed. You no longer have "immediate" access to your data.
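To make the "atomic and timestamped facts" idea concrete, here is a minimal Python sketch of an append-only fact log. It is not taken from Marz & Warren or from Roxame's code: the names `Fact` and `FactLog` and the sample data about "Pierre" are invented for illustration only.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Fact:
    """One atomic, timestamped fact. Frozen: it cannot be modified once created."""
    entity: str
    attribute: str
    value: str
    timestamp: datetime


class FactLog:
    """Append-only store (hypothetical): facts are added, never updated or deleted."""

    def __init__(self):
        self._facts = []

    def record(self, entity, attribute, value, timestamp=None):
        # Default to "now" when the caller gives no explicit timestamp.
        ts = timestamp or datetime.now(timezone.utc)
        fact = Fact(entity, attribute, value, ts)
        self._facts.append(fact)
        return fact

    def history(self, entity, attribute):
        """All facts about one attribute of one entity, oldest first."""
        matching = [f for f in self._facts
                    if f.entity == entity and f.attribute == attribute]
        return sorted(matching, key=lambda f: f.timestamp)

    def current(self, entity, attribute):
        """The present value is derived: it is simply the most recent fact."""
        facts = self.history(entity, attribute)
        return facts[-1].value if facts else None


# Invented sample data, for illustration only.
log = FactLog()
log.record("Pierre", "city", "Paris", datetime(2001, 1, 1, tzinfo=timezone.utc))
log.record("Pierre", "city", "Lyon", datetime(2015, 6, 1, tzinfo=timezone.utc))
print(log.current("Pierre", "city"))
print([f.value for f in log.history("Pierre", "city")])
```

The older fact is never overwritten: the "current" state is computed from the log, which is what makes the data atoms "eternal" and also why tools become necessary to see anything in them.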

Deep learning is a new name for deep neural networks (see Deng and Yu [6]). Neural networks are as old as computers (1940s), but "deep" is a necessary added complexity in order to deal with complex issues as well as big data. The literature in the field is quite disappointing and does not really help to build something operational. It is sometimes just an introduction, sometimes mainly mathematics-oriented (Nielsen [7], for instance).

But there are sharp breaks with traditional programming:

- the major part of a development no longer lies in pure algorithmics but in training (learning);

- the result of the training (mainly the adaptation of the weights in each neuron) is somehow invisible and incomprehensible to the developers themselves.

Here too, you lose immediate access to the behavior of the system you develop.

The cloud is a necessary partner of big data, both for its acquisition throughout the world and for its use. It also applies to processing, used notably by corporations. And it is becoming an integral part of operating systems and, of course, of the social network civilisation. The cloud also creates a distance between users and their resources.

This "second machine age" goes along with a kind of convergence of computer science, hardware (neural chips by IBM) as well as software (Hindle et al. [8]), robotics (Gaussier [9]), neurosciences (Gazzaniga [10]) and even philosophy (the last chapters of Petitot [11], referring to Husserl).

We propose a model which will enable us to develop our project, while keeping in mind that no model can exhaust the essence of things, and a fortiori of art.

Let's say that art, like any production or creation, is the expression of a content in a format. From an aesthetic standpoint, we can distinguish four levels:

- rough: the provider answers the demand as simply, easily, quickly and cheaply as possible;

- clean: the craftsman or engineer applies basic rules (construction, realism, spelling, musical chords), mainly to avoid displeasure;

- design: the designer integrates aesthetic rules and has some "style", but the aim remains functional and practical;

- art: the artist, beyond a "beautiful" answer to a demand, aims to create surprise with originality.

Art requires a "gap" between the content and the format. At the rough level, the resources are barely sufficient to satisfy the need. At the clean level, the resources are sufficient. At the design level, some liberty is left to the designer to use artistic criteria and style features (personal, brand, period). At the art level, the gap is large, and possibly there is no "need" at all, other than pleasure or glory. And the gap is filled not by fantasy or laziness, but by a novel use of resources and aesthetics.

Up to the 20th century (to make it short), art could find originality in more advanced techniques: a better range of definition, colors, timbres, textures or time recording (cinema, music). This progress lasted longer for cinema, which still today tries to progress with new technologies (3D, VR). For painting, sculpture and music, radically new ways had to be explored. And two ways opened:

- a radical negation or derision, of which Duchamp remains the emblematic name. This way has its charms, but by essence produces "one-shot" works. You cannot long remain impressively innovative with readymades or LHOOQ annotations.

- a combination of formalization and randomness: Impressionism (the strong touch with its intrinsic mixture of pigments and their local association), cubism (starting from a motto of Cézanne), then Dada collages, Oulipo generators and so on. This way has been the main field of digital and generative art, as described by Berger [12][13] and Berger-Lioret [14].

These two ways have now reached their limits. We propose to go beyond them with what we could call "Deep Identity", finding inspiration as well as tools in the new computing presented above and in our considerations on digital identity [15][16]. Let's use the French definition of hasard, rather than the English random, chance or coincidence: an encounter of two independent beings. The independence of a random() computer function may be faked (pseudo-random) if its unpredictability comes only from a deterministic but highly complicated calculation. It is more independent when it takes into account some external phenomena such as core chip temperature or unpredictable external data (air temperature, noise, etc.). But these "independent beings" are of low meaning and interest.

We think that more interesting unpredictability will come from the richness of the "independent being" in the work of art. And that will precisely be provided by the richness of the big data, which we can consider as the knowledge and culture of the work, of the deep learning, as the richness of its behavior, and of the cloud, as the richness of its external connections.

That's what we are exploring, with the limited resources of a solitary artist, in our project Roxame.2.

Technically, the 15 000 lines of Processing code of Roxame.1 have been pushed to 20 000. In spite of its amateur style, this code remains well adapted to constantly evolving issues. It is a large toolbox able to welcome a regular flow of new algorithms, functions and variables. It is accessible through a rather crude GUI (purely textual, no mouse interaction). It can also be automated for "batch" processing of texts and images (or of a webcam flow, though these functions are not presently operational).

Big data is implemented through a database file of presently some 60 000 items, most of them with links into some 150 GB of texts and images. Nearly all the author's files are now accessible this way, be they family data (50 GB), professional (60 GB, including Diccan) or Roxame's (15 GB). Of course, it is nearly nothing compared to the Google or NSA files. But, for a solitary developer, it is sufficient to experience the limits of simple algorithmic development. Data, and their referencing, are a real issue.

Deep learning is presently beyond reach, though an elementary Perceptron has been implemented, confirming us in the idea that the hard issues are not in the code (after all, a neural network can be seen as an array of weights and calls to triggering functions) but in the design of the layers. Some "learning" is nevertheless already implemented.
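To illustrate how little code an elementary perceptron needs, here is a minimal sketch in Python (Roxame itself is written in Processing; this is not its code). It uses the classic perceptron learning rule with a step "triggering function". The training set (the logical AND), the learning rate and the number of epochs are assumptions chosen for the example.

```python
def step(x):
    """Triggering (activation) function: fires when the weighted sum is non-negative."""
    return 1 if x >= 0 else 0


def predict(weights, bias, inputs):
    """A perceptron is just an array of weights, a bias and one call to step()."""
    return step(sum(w * i for w, i in zip(weights, inputs)) + bias)


def train(samples, epochs=20, rate=1.0):
    """Classic perceptron rule: nudge each weight by rate * error * input."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + rate * error * i for w, i in zip(weights, inputs)]
            bias += rate * error
    return weights, bias


# Assumed toy problem: learn the logical AND of two binary inputs.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", target, ")")
```

The whole "program" fits in three short functions; what the network ends up doing is stored in `weights` and `bias`, which is the part that becomes opaque once there are many layers.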

The cloud could rather easily (so we think) be used through web browsing functions. We leave them aside for the months to come.

Roxame.2 presents itself as a demand-answering program, where a demand is formulated as a pair: format ~ content. For example, webe ~ Pierre will produce an .html text listing all the lines containing "Pierre" and giving access by links to the documents.

Contents: the expression on the right is executed first. It triggers a query on the database (or part of it, for performance reasons). At present the syntax includes:

- a single word (the word on the right);

- a string (the word is preceded by the $ sign);

- the logical functions & and | for AND and OR;

- the value of a given parameter in the formalized part of the base: date, semantic domain, location, document type if there is a link, plus some technical data, a "love mark" expressing the author's judgment, and the number of times an item has been selected (at present the only "learning" parameter).

If the demand assigns no specific contents, or if there are no hits in the base, Roxame will elaborate something anyway.
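The format ~ content syntax described above can be sketched as follows in Python. This is only an illustration, not Roxame's Processing code: the function names, the operator precedence (& binding tighter than |), the case-sensitive matching and the sample base are all assumptions, and the fallback for an empty selection is left out.

```python
import re


def parse_demand(demand):
    """Split 'format ~ content' into its two sides; either side may be empty."""
    fmt, _, content = demand.partition("~")
    return fmt.strip(), content.strip()


def term_matches(term, line):
    """'$abc' matches any line containing the string; 'abc' must be a whole word."""
    if term.startswith("$"):
        return term[1:] in line
    return term in re.findall(r"\w+", line)


def select(content, base):
    """Assumed precedence: 'a & b | c' is read as (a AND b) OR c."""
    if not content.strip():
        return []
    groups = [[t.strip() for t in group.split("&")] for group in content.split("|")]
    return [line for line in base
            if any(all(term_matches(t, line) for t in group) for group in groups)]


# Invented sample base: each item is reduced to one line of text.
BASE = [
    "Pierre paints in Paris",
    "Paris_01 street photo",
    "Letter to Pierrette",
    "Roxame portrait 2004",
]

fmt, content = parse_demand("webe ~Pierre")
print(fmt, content)
print(select(content, BASE))
print(select("$Pierre", BASE))
print(select("$Paris | Roxame", BASE))
```

The content side is evaluated first and yields a selection; the format side (here only parsed, not executed) would then receive that selection.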

The selection (a subset of the base) is then presented to the format functions.

Roxame.2 aims to provide a range of formats as wide as possible, from just one bit (there is, or not, some resource answering the query) to some megabytes (HD images, films). The following formats are presently operational, at a rough level except for the image formats inherited from Roxame.1:

- binary: boole

- text (html): webe, survey (about a request), critique (about an image), comment (about a dico item), item (on a Diccan term)

- single image (many, since they were in Roxame.1): image, etch, kross, sapla, synthe4, abs0, abs1, blue_tone, green_tone, bronze_tone, sepia_tone, gray_tone, dither, differpix, contour, gradients, blurr, ranfuz, medians, median250, sharpened, postes, BWhite, pixelz, Cohenn, Tifine, Quadri, negativ, Phrases, Phrases1, Abyme, Clatext, Contapla. These images can be produced in several dimensions (another kind of "format"), from TestD (360 x 288), useful for tests, and BD (640 x 480), the former standard format for Roxame, then SD, HD, A4L, A4P, FHD, 4KM, 4KD.

- simple illustrated text: webi, webt

- more complex formats combining text and image: some (booki, critic) are web pages aimed at browsers, others (comic and guide) are aimed at printing.


guide is even more complex, trying to replace the poor typographic functions of Processing with CSS.

- film (sound is not yet implemented)

If there is no format specified, Roxame will exploit its resources "at best".

Production in these formats is generally at the "rough" level, except for images, which use the algorithms developed for Roxame.1.

Upon the foundations now laid, the months to come will explore some ways to upgrade Roxame's productions from the rough to the clean, design and artistic levels. An endless task, of course. But there are some fascinating tracks to open in the wild.

Quantitative parameters. At present, except for boole and image, the formats do not have a precisely quantitative structure. It could be specified (a book of 100 pages...). Even for images, we could add quantitative prescriptions beyond the size in pixels. For instance we could impose a level of saturation or of "complexity" (see [13]).

There are simple as well as complex ways to increase or decrease, for instance, the number of lines in the selection:

- lexical modes: for instance, instead of asking for a word ("Paris"), we can ask for a string ("$Paris", in our query language), then getting also Paris_01 to Paris_20; the selection can be made still wider with substrings ($aris, $ris, $is, $s);

- semantic modes: that is a vast if not infinite way. There is a very simple case with dates: from one year to a decade or a century. We have begun to develop a semantic tree, which would let us, for example, extend (from "dog" to "animal", then to "living being") or restrict (from "dog" to "labrador", "shepherd"...). The same goes for geographic regions.
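The lexical and semantic modes above can be sketched in a few lines of Python. The function names and the tiny `PARENT` tree are hypothetical, built only from the examples given in the text; a real semantic tree would of course be much larger.

```python
def lexical_widenings(word):
    """From the exact word to shorter and shorter strings: Paris, $Paris, $aris, ..."""
    return [word] + ["$" + word[i:] for i in range(len(word))]


# Hypothetical fragment of a semantic tree, stored as child -> parent links.
PARENT = {
    "labrador": "dog",
    "shepherd": "dog",
    "dog": "animal",
    "animal": "living being",
}


def broaden(term):
    """Extend a term to more and more general ones by walking up the tree."""
    chain = []
    while term in PARENT:
        term = PARENT[term]
        chain.append(term)
    return chain


def narrow(term):
    """Restrict a term to its direct children in the tree."""
    return sorted(child for child, parent in PARENT.items() if parent == term)


def widen_year(year):
    """Date ranges of growing width: the year, its decade, its hundred-year block."""
    decade = year - year % 10
    block = year - year % 100
    return [(year, year), (decade, decade + 9), (block, block + 99)]


print(lexical_widenings("Paris"))
print(broaden("dog"), narrow("dog"))
print(widen_year(2004))
```

Each function returns a ladder of wider (or narrower) queries, so the size of the selection can be tuned step by step until it fits the requested format.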

Structures. We can find structures on both sides of the demand. Formats imply structures: a range of proportions between text and image, time structures (from the basic chronological succession to complex games with flashbacks and film editing), subset structures (chapters of a book, events in a family...). (These are, somehow, the order and equivalence relations of set theory.)

Correspondences between structures can enrich the connection between format and query.

- The format defines a configuration, a structure. For instance, a film is a sequence of images, which must be of some complexity and have some common feature, and also a time structure (the "dramatic" Aristotelian structure...).

- The query's selection triggers the emergence of a structure: the answer to the query contains a given quantity of text, of images, possibly of films. In this basket, we can detect semantic dominances (knowledge domain, geographical location, historical period...).

Emotional states. Roboticists are actively exploring the topic of emotion, for at least two very practical reasons. First, some kinds of emotions, like hunger or fear, are both quite easy to model and efficient in commanding behavior. Second, the expression of emotions helps to facilitate user-robot interaction, be it for "company robots" (or pet robots) or for industrial robots working cooperatively with human workers. (This field is notably explored by the Japanese, who are much more "animist" than the West.)

In art, that could increase the unpredictability of results, a sort of "personal touch"... We developed a rather crude model for Roxame.1, and have left it aside up to now... because when Roxame became "depressive", she refused to work...

Learning. There are several tracks.

- Just recording what one does. Roxame.2 counts the accesses to its items. Since this count is constantly evolving, it adds another degree of unpredictability. But, more positively, it opens a range of answers, from the "mainstream" use of the most accessed terms to the "original" use of the least accessed.

- If we could add some feedback of the human judgment on results, we would enter the quite traditional "learning" process, and of course the "deep learning" path.
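The first track, counting accesses and using the counts to choose between "mainstream" and "original" answers, could look like the following Python sketch. The `AccessLog` class and the item names are invented for the example and do not describe Roxame's actual data structures.

```python
from collections import Counter


class AccessLog:
    """Hypothetical helper: counts how many times each item has been selected."""

    def __init__(self):
        self.counts = Counter()

    def record(self, items):
        """Call this each time a query selects some items."""
        self.counts.update(items)

    def rank(self, items, style="mainstream"):
        """'mainstream' puts the most accessed items first, 'original' the least."""
        most_first = style == "mainstream"
        return sorted(items, key=lambda item: self.counts[item], reverse=most_first)


# Invented item names, for illustration only.
access = AccessLog()
access.record(["portrait", "landscape"])
access.record(["portrait"])

candidates = ["portrait", "landscape", "sketch"]
print(access.rank(candidates, "mainstream"))
print(access.rank(candidates, "original"))
```

Because every answer changes the counts, the same demand asked twice may not give the same ranking, without any call to random().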

Interaction. At present, Roxame is not well gifted in this field. The GUI is quite crude and the only input is the keyboard. For now, we will concentrate on a more detailed demand than the pure format ~ query syntax. It could be an interesting but demanding development, in order to foster the dialogue between Roxame and its users, let's say between the artist and her fans...

Recognition. Recognition may be a quite simple and deterministic procedure. It is a mapping from any set (e.g. of objects, persons, images, sounds...) into a limited set of values. For instance, "recognize a color" will give, for any RGB value, the nearest element in a palette (i.e. the nearest color in the RGB cube, in the Euclidean sense). But, on the other hand, recognition can be very difficult. It is a typical use of neural nets, as shown at length for written characters by Nielsen [7].
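The simple, deterministic case of color recognition can be written in a few lines. This Python sketch assumes a small five-color palette chosen for the example; Roxame's own palettes are not described here.

```python
# Assumed palette, for illustration only: name -> (R, G, B).
PALETTE = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
}


def recognize_color(rgb, palette=PALETTE):
    """Return the name of the palette color nearest to rgb in the RGB cube."""

    def squared_distance(name):
        # Squared Euclidean distance; the square root is not needed to compare.
        return sum((a - b) ** 2 for a, b in zip(rgb, palette[name]))

    return min(palette, key=squared_distance)


print(recognize_color((250, 10, 10)))
print(recognize_color((20, 20, 30)))
print(recognize_color((200, 200, 210)))
```

Every possible RGB value is mapped to one of five names: a mapping from a large set into a limited set of values, with no training involved.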

We hope to show more complete and convincing (dare we say "beautiful") results in the months to come. The most challenging and exciting part is the exploration of demands where the gap between the query answer and the format is large, and where Roxame really has to make use of its inner resources to offer surprising works without tossing coins.

Thanks to Alain Le Boucher for his pertinent observations, to the Institut Fredrik Bull for its stimulating lectures and to the Galerie Charlot team for their unrelenting support of digital art.

[1] Aziosmanoff F. : Living Art. Editions du CNRS, Paris 2010.

[2] Aziosmanoff F. : Living Art, fondations. Editions du CNRS, Paris 2015.

[3] Brynjolfsson E. and McAfee A. : The Second Machine Age. Norton, New York 2016.

[4] Bostrom N. : Superintelligence. Paths, dangers, strategies. Oxford University Press 2014.

[5] Marz N. and Warren J. : Big Data, Principles and best practices. Manning, New York 2015.

[6] Deng L. and Yu D. : Deep Learning. Methods and Applications. Now, Boston 2014.

[7] Nielsen M. : Neural Networks and Deep Learning. Online book : http://neuralnetworksanddeeplearning.com/

[8] Hindle A., Barr E.T., Gabel M., Su Z. and Devanbu P. : On the Naturalness of Software. Communications of the ACM, May 2016.

[9] Gaussier P. : His web list of publications.

[10] Gazzaniga M.S. : Who's in Charge. HarperCollins 2011.

[11] Petitot J. : Neurogéométrie de la vision. Ecole Polytechnique, Paris 2008.

[12] Berger P. : Art, algorithmes, autonomie. Programmer l'imprévisible. Afig workshop, Arles 2009.

[13] Berger P. : Aesthetics and Algorithms. Around the Uncanny Peak. Laval Virtual VRIC 2013.

[14] Berger P. and Lioret A. : L'art génératif. L'Harmattan, Paris 2012.

[15] Berger P. : Digitally Augmented Identity. Laval Virtual 2010.

[16] Berger P. : What Matters in Digital Art ? The (Digital) Soul. Laval Virtual VRIC 2011.