Yield

(The text is lost. We hope to find or rewrite it soon).

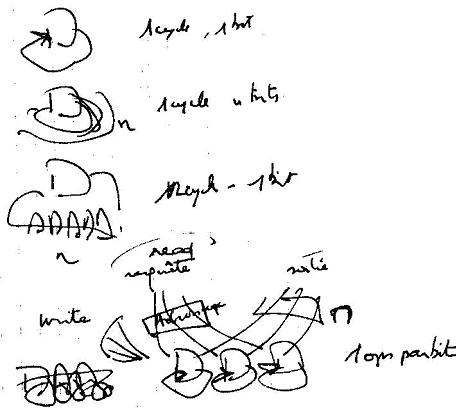

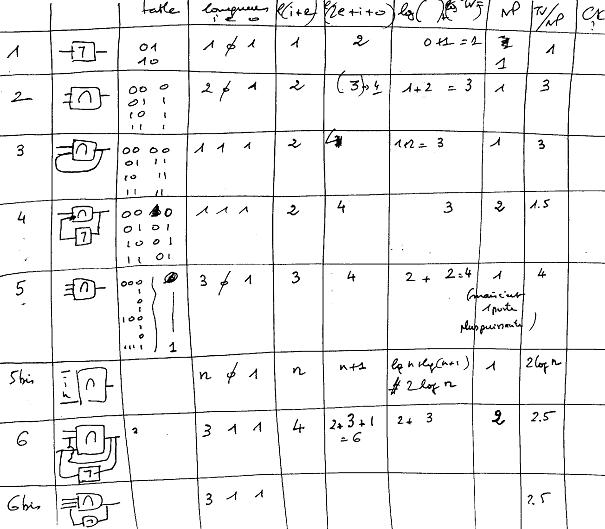

1. Bits per cycle, and gates

10.4. Gate metrics

Gate/ops. In the simplest case, one bit, one ops.

For one bit per cycle, do we still need many more gates than bits? How many (we are talking about memory)? If the transfer rate is one bit per cycle, then ops = capacity.

For the writes, we take the addressing/decoding, then collect the results. We can organize the memory in pages (a simpler addressing mode). There are also, in some way, the autonomous processor emitters.
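A rough count helps with the question above of how many gates a memory needs for one bit per cycle. The sketch below is only an order-of-magnitude estimate under stated assumptions, not a circuit design: it assumes a flat (single-level) address decoder with one AND gate per word plus one inverter per address line, and counts each storage element as a single gate. The function name `memory_gate_estimate` is hypothetical.

```python
def memory_gate_estimate(address_bits: int, word_bits: int = 1) -> dict[str, int]:
    """Order-of-magnitude gate count for a memory of 2**address_bits words.

    Assumptions (illustrative only): a flat decoder with one AND gate per word
    plus one inverter per address line, and one storage element per stored bit,
    each counted as a single gate.
    """
    words = 2 ** address_bits
    decoder_gates = words + address_bits  # word-select ANDs + address inverters
    storage_gates = words * word_bits     # one storage element per bit
    return {"bits": words * word_bits, "gates": decoder_gates + storage_gates}


for k in (4, 10, 20):
    est = memory_gate_estimate(k)
    print(f"{k:2d} address bits: {est['bits']:>8} bits, {est['gates']:>8} gates, "
          f"{est['gates'] / est['bits']:.2f} gates per bit")
```

With one bit per word, this count gives a little more than two gates per bit, because the decoder costs about as much as the storage itself; wider words spread the decoder over more bits.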

The gate metric reduces rather easily to ops: an elementary gate performing one cycle per time unit indeed represents one ops. Then we have to find the exponent 2 somewhere.

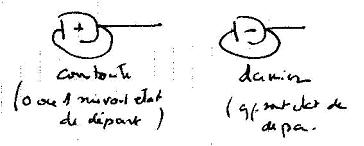

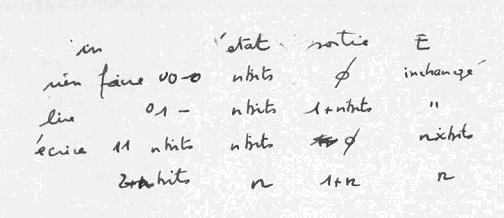

A table of bits and gates, with truth tables (in/state/out).

Simple cases with one gate.
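One simple case with one gate and one bit of state can be tabulated in the in/state/out form above. The sketch assumes, purely for illustration, a single XOR gate whose output feeds back into a one-bit state (a toggle); the helper names `toggle` and `table` are hypothetical.

```python
from itertools import product


def toggle(inp: int, state: int) -> tuple[int, int]:
    """One XOR gate feeding back on a one-bit state: returns (out, next_state)."""
    next_state = inp ^ state
    return state, next_state


def table(system) -> list[tuple[int, int, int, int]]:
    # one row per (in, state) combination: in, state, out, next state
    return [(i, s, *system(i, s)) for i, s in product((0, 1), repeat=2)]


print("in state out next")
for row in table(toggle):
    print(*row)
```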

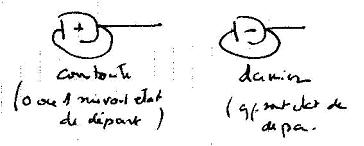

10.6. Combination of systems and power computation

Combining S is not easy, nor is relating their L and their ops. The differences and complementarity of devices, and parallel versus series architectures, will play a role.

The computation seems easy for serial assemblies, if the series is uniform (a series of identical gates, or of negations). It is also easy if the assembly is purely parallel. Beyond that, it quickly gets much more complex!
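Why the uniform serial case is easy can be checked directly: a chain of identical NOT gates collapses to a single NOT (odd length) or to a plain wire (even length). A minimal sketch, with bits as the integers 0 and 1; `chain` and `collapse_not_chain` are illustrative names.

```python
from functools import reduce
from typing import Callable

Gate = Callable[[int], int]


def NOT(x: int) -> int:
    return 1 - x


def chain(gates: list[Gate]) -> Gate:
    """Compose single-input gates in series: each output feeds the next gate."""
    return lambda x: reduce(lambda value, gate: gate(value), gates, x)


def collapse_not_chain(n: int) -> Gate:
    # n negations in series act as one NOT if n is odd, as a wire if n is even
    return NOT if n % 2 else (lambda x: x)


for n in range(1, 6):
    long_form = chain([NOT] * n)
    short_form = collapse_not_chain(n)
    assert all(long_form(x) == short_form(x) for x in (0, 1))
    print(n, "gates ->", [long_form(x) for x in (0, 1)])
```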

If the processor has a large E, it can loop over itself (my God, what does that mean?). That relates to the autonomy problem (the longest non-repetitive cycle).

The input rate (e.g. from a keyboard) conditions how this cycle is passed over, through random input bits, i.e. bits not predictable by the processor.

One more difficulty: the basic randomness of DR, negligible in general, but...

Ops of PR / ops of KB.

PR: instruction length × frequency.

KB: sends only 8 bits, at a much lower frequency.
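The ratio can be worked out with the definition given just above (bits per operation × frequency). The figures are hypothetical, chosen only to show orders of magnitude: a 64-bit processor executing one instruction per cycle at 3 GHz, and a keyboard sending one 8-bit code five times per second.

```python
def ops(bits_per_operation: int, operations_per_second: float) -> float:
    """ops taken here as bits handled per operation times operation frequency."""
    return bits_per_operation * operations_per_second


# Hypothetical figures, for illustration only
pr = ops(64, 3e9)  # 64-bit processor, one instruction per cycle at 3 GHz
kb = ops(8, 5)     # keyboard: one 8-bit code, five keystrokes per second

print(f"ops(PR) = {pr:.3g}, ops(KB) = {kb:.3g}, ratio = {pr / kb:.3g}")
```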

Since ops(KB) << ops(PR), we could say that KB has little influence... and yet it is enormous.

Under Windows, at startup, all the work done takes several minutes, which is not negligible in terms of KB ops; but after that, PR no longer works and waits for KB.

PR becomes totally dependent.

But we could have loaded a program that does something on its own (animation, screen saver...). The length of its non-repetitive loops should be computable.
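For a program running without input, the length of its non-repetitive run is indeed computable by simulation: a deterministic processor is a function from state to state, so its trajectory must repeat after at most as many steps as it has states (2^n for n bits of state). A minimal sketch that records when each state is first seen; the 8-bit linear congruential step `lcg8` is a hypothetical stand-in for an animation or screen saver.

```python
from collections.abc import Callable, Hashable


def run_length(step: Callable[[Hashable], Hashable], start: Hashable) -> tuple[int, int]:
    """Return (transient, cycle) for the trajectory start, step(start), ...

    transient + cycle is the number of distinct states visited, i.e. the
    longest non-repetitive run before the processor starts repeating itself.
    """
    first_seen = {}
    state, t = start, 0
    while state not in first_seen:
        first_seen[state] = t
        state = step(state)
        t += 1
    transient = first_seen[state]
    return transient, t - transient


def lcg8(x: int) -> int:
    # hypothetical "screen saver": an 8-bit counter that never reads any input
    return (5 * x + 3) % 256


print(run_length(lcg8, 0))                  # full cycle over all 256 states
print(run_length(lambda x: x * x % 10, 2))  # 2 -> 4 -> 6 -> 6 ...
```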

Other ideas to compute combinations

1st idea: emission of a fixed string (for instance E$), concatenated with one bit extracted from the string E$. Then the non-repetitive length is (l(E$) + 1)·l(E$), of order l(E$)².

2nd idea: start again, inserting one bit of E$ into E$ itself, successively at every position. Then we have l(E$)² · l(E$), that is, of order l(E$)³.

3rd idea: start again from the 2nd idea, but with substrings of length from 1 up to l(E$). We get order l(E$)⁴.

4th idea: emit all the permutations of E$.

For all these ideas, we must take into account the fact that E$ may contain repetitions.

Indeed, with the 1st idea above, only two different strings can be emitted (E$ followed by 0 or by 1)... and we are taken back to Kolmogorov: K(E$) (the smallest reduction) and Ext(E$), the largest extension without external input. In fact, this length will depend on the generation program.
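The effect of repetitions inside E$ can be checked by generating the emissions of ideas 1, 2 and 4 and counting the distinct strings. A minimal sketch, assuming E$ is a bit string written with the characters 0 and 1; the function names are illustrative.

```python
from itertools import permutations


def idea1(e: str) -> list[str]:
    # E$ followed by each of its own bits, in turn
    return [e + b for b in e]


def idea2(e: str) -> list[str]:
    # each bit of E$ inserted into E$ at every position
    return [e[:p] + b + e[p:] for b in e for p in range(len(e) + 1)]


def idea4(e: str) -> list[str]:
    # every permutation of the bits of E$
    return ["".join(p) for p in permutations(e)]


def report(e: str) -> None:
    for name, gen in (("idea 1", idea1), ("idea 2", idea2), ("idea 4", idea4)):
        out = gen(e)
        print(f"{name}: {len(out)} emissions, {len(set(out))} distinct")


report("1011")
```

For E$ = 1011, idea 1 emits 4 strings (20 bits in all, i.e. (4 + 1)·4) but only 2 distinct ones, idea 2 emits 20 strings but only 6 distinct ones, and idea 4 emits 24 permutations but only 4 distinct ones.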

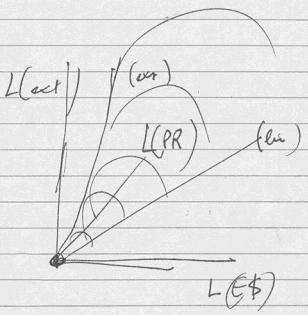

We could have L(Ext) = f(L(E$), L(PR)), with the limit case L(E$) = 0. Then we would have a "pure" generator.

(L(PR) = program length; something like the vocabulary problem.)

If E is fixed, l(E) = l(PR) + l(E$), with an optimal distribution. But L(PR) is meaningful only if we know the processor power.

We need a third element: L(comp), L(PR), L(E).

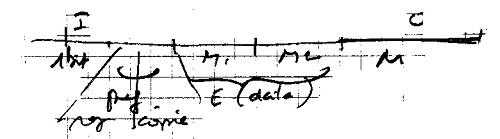

Processor-keyboard combination

The global ops computation must include "dependency factors". The result will lie somewhere among:

- the smallest ops
- the product of the ops
- the sum of the ops

If constraint factors are weak, the sum of ops will mean little if one of the components is much more powerful than the other: (a + b)/a = 1 + b/a.
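The three candidate values and the ratio (a + b)/a can be computed directly. A minimal sketch; which candidate actually applies depends on the dependency factors, which this code does not model, and the sample figures are hypothetical.

```python
def combined_ops_candidates(a: float, b: float) -> dict[str, float]:
    """The three candidate values for the ops of a two-component system."""
    return {"smallest": min(a, b), "sum": a + b, "product": a * b}


def sum_gain(a: float, b: float) -> float:
    """(a + b) / a = 1 + b / a: what adding component b brings to component a."""
    return (a + b) / a


print(combined_ops_candidates(4, 10))
print(sum_gain(1.92e11, 40))  # a strong processor plus a keyboard: the gain is ~1
```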

Screen/processor combination

Here it is much simpler. The smaller one is the most important, with the constraint included in the graphics card.

Memory/processor combination

PR-Mem (exchanges)

1. K: there is no external random input (alea); we deal with the whole.

2. Mem is not completely random for PR, since PR writes into it.

That depends rather strongly on the respective sizes:

- if Mem >> PR, it behaves rather like a random source;
- if Mem << PR, there is no meaningful randomness.

In between, strategies, patterns and various tracks can be developed. We would need a reference "PR", then work recursively.

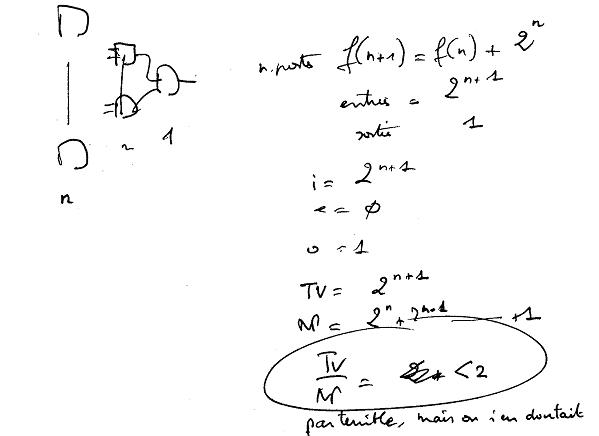

There is some indifference TV/NP. The relation may be very good if we choose the right structures. Then Grosch's and Metcalfe's laws will apply (and we then have a proof and a more precise measure).
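Metcalfe's law is usually stated as: the value of a network grows as the square of the number n of connected nodes, because the number of possible pairwise links is n(n - 1)/2. The sketch below only counts those links; taking the link count as the "value" is the assumption behind the law, not something the code demonstrates.

```python
def metcalfe_links(n: int) -> int:
    """Possible pairwise links among n connected nodes: n(n - 1) / 2."""
    return n * (n - 1) // 2


for n in (2, 10, 100, 1000):
    print(n, metcalfe_links(n))
```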

Conversely, if we work at random, the relation degrades. There is a natural trend toward degeneration (DR, or Carnot's laws).

Conversions:

- we can define the gate system necessary to get a defined string as output;
- we can define a gate system as a connection system inside a matrix (known, but of undefined dimension) of typical gates;
- we can define a system of gates and communication lines through a text (analogous to a bit memory bank).
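The third conversion, a gate system defined through a text, can be sketched with a tiny netlist format. The format (one gate per line, `output = OP input1 [input2]`, listed in dependency order) is a hypothetical one invented for this illustration, not an existing standard; the example text wires four NAND gates into an exclusive OR.

```python
from itertools import product

# Hypothetical text format: one gate per line, "output = OP input1 [input2]"
NETLIST = """
n1 = NAND a b
n2 = NAND a n1
n3 = NAND b n1
out = NAND n2 n3
"""

OPS = {
    "NOT": lambda x: 1 - x,
    "AND": lambda x, y: x & y,
    "OR": lambda x, y: x | y,
    "NAND": lambda x, y: 1 - (x & y),
    "XOR": lambda x, y: x ^ y,
}


def parse(text: str) -> list[tuple[str, str, list[str]]]:
    gates = []
    for line in text.strip().splitlines():
        out, expr = (part.strip() for part in line.split("="))
        op, *args = expr.split()
        gates.append((out, op, args))
    return gates


def evaluate(gates, inputs: dict[str, int]) -> dict[str, int]:
    values = dict(inputs)
    for out, op, args in gates:  # gates are listed in dependency order
        values[out] = OPS[op](*(values[a] for a in args))
    return values


gates = parse(NETLIST)
for a, b in product((0, 1), repeat=2):
    print(a, b, evaluate(gates, {"a": a, "b": b})["out"])
```

The length of such a text gives one crude handle on conversion cost: the same function written as a single `out = XOR a b` line is much shorter, provided XOR is accepted as a primitive gate.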

Then we can look for minimal conversion costs, which is one way of looking for the KC. The right idea would be to look for a KC production per time unit... not easy...

A typology of input/output correspondences?

Relations input/output


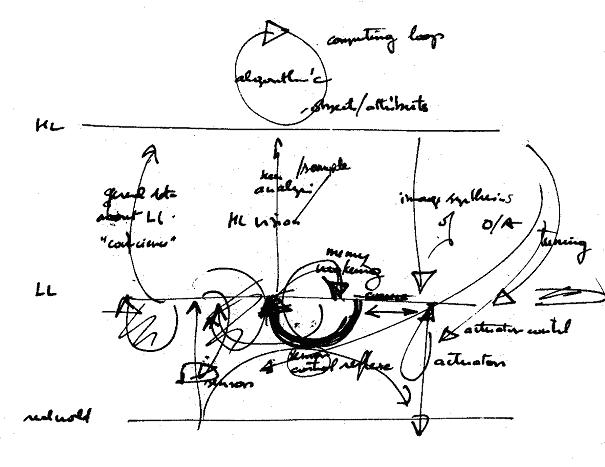

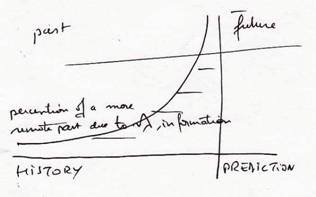

6.9. Model

The term "model" may be taken as a mere synonym of representation. Its main meaning in DU: a set of coherent assertions, generally with some quantitative relations.

In a more specific sense, a model represents a dynamic, or at least changing, system, generally with inputs and outputs. The model is said to explain the being if it lets us not only describe it at any time, but also predict the behaviour of the modelled being (possibly with an imperfect prediction).

A being is said to be "explained" if there is a model of it. The length of the model is the KC (or at least an estimate of the KC) of the modelled being. Normally, a model is shorter than the object it describes. Then the yield is the ratio: length of the model / length of the modelled being, taking the quality of prediction into account.
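For lossless models, the ratio can be approximated with a general-purpose compressor standing in for the model. This is only a sketch: zlib output is an upper bound on the KC, not the KC itself, and a lossless model predicts perfectly, so the prediction-quality factor drops out here. A lower ratio means a more compact model.

```python
import random
import zlib


def model_yield(being: bytes) -> float:
    """Ratio length(model) / length(being), with zlib output standing in for the model."""
    model = zlib.compress(being, 9)
    assert zlib.decompress(model) == being  # the model regenerates the being exactly
    return len(model) / len(being)


regular = b"01" * 5000                     # a highly structured being
noisy = random.Random(0).randbytes(10000)  # a pseudo-random being (Python 3.9+)

print(f"regular: {model_yield(regular):.4f}")
print(f"noisy:   {model_yield(noisy):.4f}")
```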

Efficiency in processing would be another expression of yield.

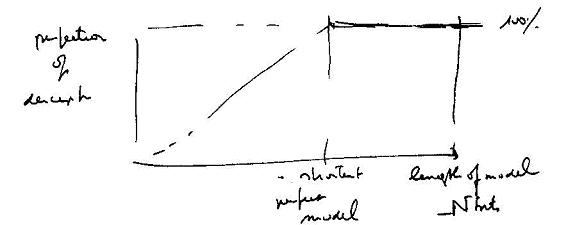

The performance of a model may be evaluated automatically. It can evolve. There are different cases, depending on the availability of external information on the domain modelled, or, worse, when an enemy attempts to conceal as much as he can.

If the model operates from a source, a critical point will be the sequence length, possibly with several nested lengths (e.g. the 8 bits of a byte, and the page separators). But there are other separators than those of fixed-length systems. Find the separators automatically, etc.
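Finding a fixed-length separator automatically can be sketched by testing candidate periods and keeping the one for which each bit most often agrees with the bit one period later. A minimal sketch, assuming a stream of 7-bit ASCII codes stored in bytes, so that the top bit of every byte is 0; pseudo-random codes are used so that only that structural bit betrays the byte length. Variable-length separators would need other methods.

```python
import random


def to_bits(data: bytes) -> list[int]:
    # most significant bit first, 8 bits per byte
    return [(byte >> (7 - k)) & 1 for byte in data for k in range(8)]


def agreement(bits: list[int], period: int) -> float:
    # fraction of positions i where bit i equals bit i + period
    n = len(bits) - period
    return sum(bits[i] == bits[i + period] for i in range(n)) / n


def guess_period(bits: list[int], candidates=range(2, 16)) -> int:
    return max(candidates, key=lambda p: agreement(bits, p))


rng = random.Random(1)
source = bytes(rng.randrange(128) for _ in range(5000))  # top bit always 0
bits = to_bits(source)
print(guess_period(bits))  # 8: the byte length
```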

Perfection of description

Beyond a perfect, lossless model, any growth of the model length is useless.

????

Processing depth in the processor

Total involvement of a human being giving his or her life for a cause.

If a being is processed bit by bit, with no memorization, the depth in the processor is minimal (1).

If a being is processed as a sequence of parts, the depth in the processor is, as above, the length of one element or of several elements. But the processor may first have to store the structure, and may also, somehow in parallel, perform some global computation, if only counting the number of unit records.

If the totality of the being is first stored and internally represented, with a processing that takes its totality into account, the processor depth is the ratio between the size of the being and the total processor size. (...)
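The three cases can be set side by side. A minimal sketch, assuming sizes are counted in bits and that the proportional depth is the absolute depth divided by the processor size (the same normalization as the third case); the class and mode names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Processing:
    being_bits: int        # size of the being to process
    processor_bits: int    # total size of the processor
    mode: str              # "bitwise", "by_element" or "whole"
    element_bits: int = 8  # length of one element, used in "by_element" mode

    def absolute_depth(self) -> int:
        """Bits of the being engaged in the processor at the same time."""
        if self.mode == "bitwise":
            return 1
        if self.mode == "by_element":
            return self.element_bits
        if self.mode == "whole":
            return self.being_bits  # meaningful only if the being fits in the processor
        raise ValueError(f"unknown mode: {self.mode}")

    def proportional_depth(self) -> float:
        """Absolute depth relative to the total processor size."""
        return self.absolute_depth() / self.processor_bits


for mode in ("bitwise", "by_element", "whole"):
    p = Processing(being_bits=1000, processor_bits=8000, mode=mode)
    print(f"{mode:10s} absolute={p.absolute_depth():5d} "
          f"proportional={p.proportional_depth():.6f}")
```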

An absolute depth would be something like a human being going as far as giving his or her life. Or even the "petite mort" in sexual intercourse. The intensity of replication. Core replication in life reproduction.

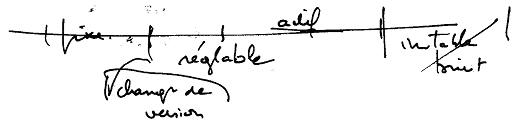

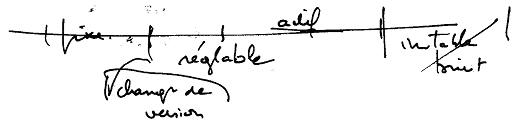

At the other extreme, a computer never involves the totality of its content in an operation. A system has a classical distribution between fixed functions, versions, active moves, unstable regions and noise.

This could then be analyzed in layers, for hardware (CPU, peripherals) as well as for software (BIOS, OS, application programs, etc.), and more generally in relation to the different kinds of architecture (layers, centralization, etc.).

By comparison with this classical distribution, we should be able to qualify the parts of the system, from very difficult to modify (hard) to easily modifiable (soft), if not unstable. We could look here for the role of:

- dematerialization (localization, rewiring)
- virtualization (memory, addressing, classes)
- digitization (reproducibility, mixing of logic and data).

Relations between the two "dimensions" of depth

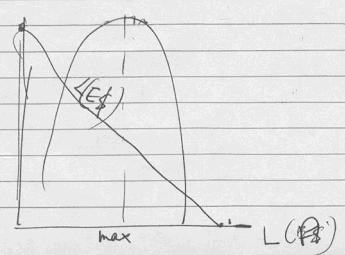

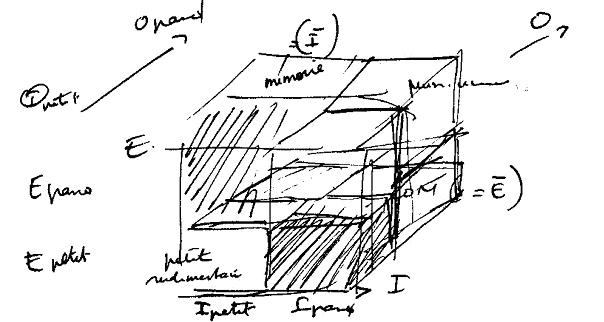

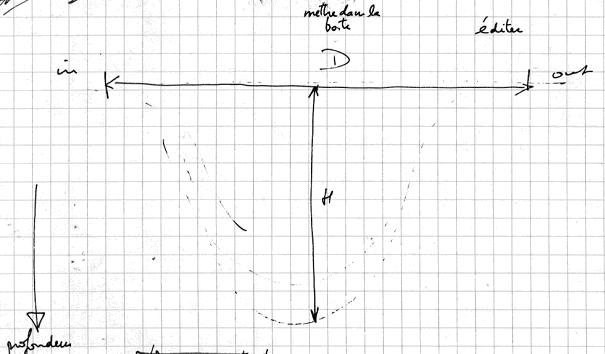

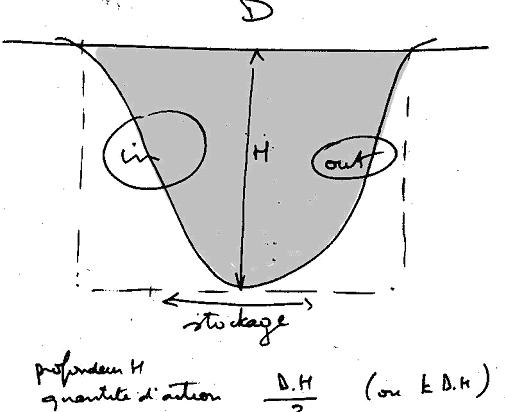

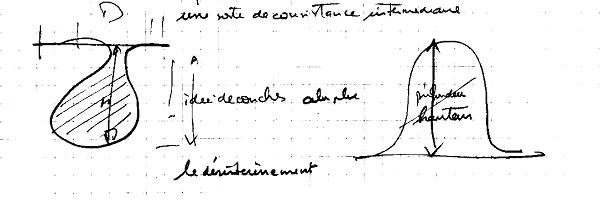

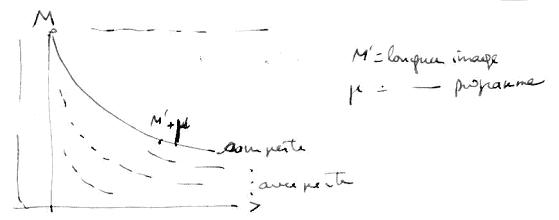

We could propose a model such as the following figure, with Do as the distance between input and output, and Dp as the proportional depth into the processor.

The ratio H/D could qualify, for instance, a kind of process/processor. Note, for instance, that a deep Dp is useless if Do remains low...

The surface, let us say Do·Dp/2, would give a measure of the processing quantity involved in processing one element. We could look for a relation with measures in ops, for instance by multiplying by the flow rates (average, or another combination of the input/output rates).
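The surface measure and its conversion to a rate can be written down directly. A minimal sketch, assuming Do and Dp are plain numbers, rates are in elements per second, and the input and output rates are combined by an arithmetic or a geometric mean; the choice of mean is left open in the text, and the function names are hypothetical.

```python
import math


def processing_quantity(do: float, dp: float) -> float:
    """Surface Do·Dp/2: processing quantity for one element (triangle model)."""
    return do * dp / 2


def ops_estimate(do: float, dp: float, rate_in: float, rate_out: float,
                 mean: str = "arithmetic") -> float:
    # multiply the per-element quantity by a combined flow rate
    if mean == "arithmetic":
        rate = (rate_in + rate_out) / 2
    elif mean == "geometric":
        rate = math.sqrt(rate_in * rate_out)
    else:
        raise ValueError(f"unknown mean: {mean}")
    return processing_quantity(do, dp) * rate


print(ops_estimate(4, 0.5, 10, 30))
print(ops_estimate(4, 0.5, 10, 40, mean="geometric"))
```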

Depth Limits

The core cannot be reduced to one bit, still less to a Leibnizian monad. There is always some surface, some minimal loop.

Special attention should be paid to "total self-reproduction", as in living beings, and we should think more about the fact that, in artefacts, self-reproduction is so absolutely forbidden.

A form of total depth would be the transformation of the being into a set of totally independent, orthogonal bits. These bits could then be processed one by one, or combined with those of a similar being, and the result used to synthesize the new being. That is nearly the case of sexual reproduction, except that:

- the genome does not operate bit by bit, but gene by gene (a gene being a sequence of base pairs, each equivalent to two bits);
- life reproduction involves not only the genome but the totality of the cell.
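The difference between recombining bit by bit and gene by gene can be sketched on bit strings. This is a toy illustration, not a biological model: each offspring unit (one bit, or one block of `gene_len` bits) is copied from one parent chosen at random, and a seeded `random.Random` keeps runs reproducible.

```python
import random


def bitwise_recombine(a: str, b: str, rng: random.Random) -> str:
    # each bit of the offspring is taken independently from one parent
    return "".join(rng.choice(pair) for pair in zip(a, b))


def genewise_recombine(a: str, b: str, gene_len: int, rng: random.Random) -> str:
    # whole genes (blocks of gene_len bits) are inherited as indivisible units
    genes = []
    for start in range(0, len(a), gene_len):
        parent = rng.choice((a, b))
        genes.append(parent[start:start + gene_len])
    return "".join(genes)


rng = random.Random(42)
mother, father = "00000000", "11111111"
print(bitwise_recombine(mother, father, rng))
print(genewise_recombine(mother, father, 4, rng))
```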

Limits of parsing:

- collinear with the processed beings: a minimal sequence of bits; the being/flow cannot be totally "destreamed";
- transversal: minimal operations;
- non-recognition, residue.

Here we have to fight against a view, or a dream, that we cannot totally exclude from our minds: the idea that there is, somehow and somewhere, an ultimate nature of things which would, at the extreme, be beyond words. The Leibnizian monad is a resolute conceptualization of this idea. "Dualist" thinking can elaborate on it. But it is beyond the limits of our study here, where digital relativity or digital quanta impose limits on the parsing, and a minimal size on the "core" of a process as well as of a processor (Shaeffer p. 45).

Depth is also hierarchical: levels in a corporate structure, escalation in maintenance and repair operations.

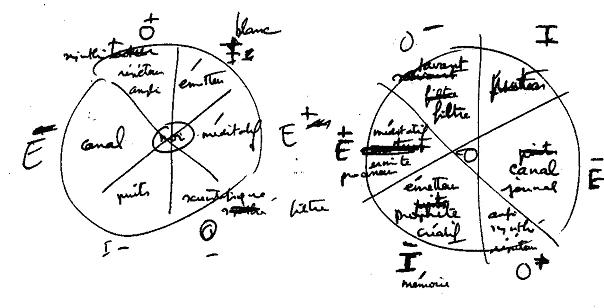

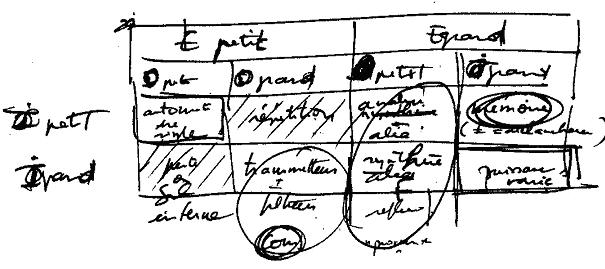

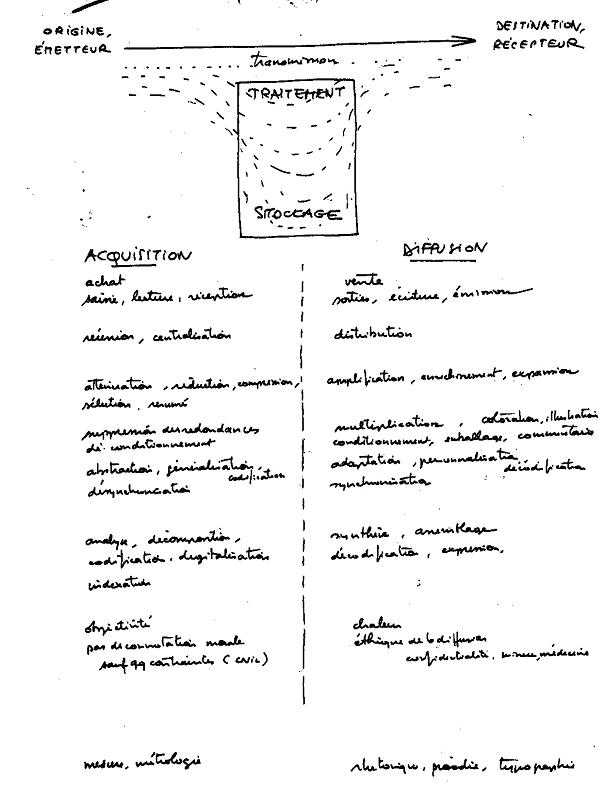

Another view of I/O and depth

Acquisition and diffusion

A sort of intermediary consistency

If we relate that to resonance and mystics, there is some possibility of an infinite depth.

Relationships between I and E?

Changes...

A general scheme. Of what?

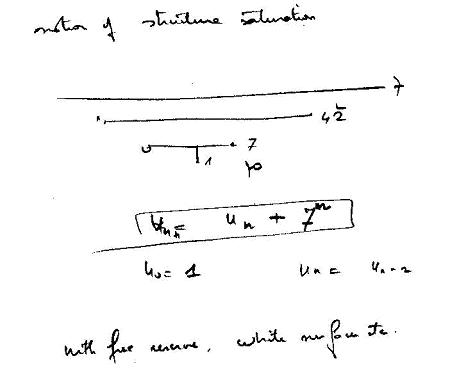

Notion of structure saturation

Back to gates

Gate combinations

6.16. Representation metrics and yield

See also the chapter "Truth"

A representation is not necessarily shorter than what it represents. In particular, when an object has just been instantiated from a class, there are many more things in the class than in the object proper!
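A small class shows the point in code: the instance carries only its own data, while the shared attributes and behaviour live in the class. The `Ship` class is hypothetical (a nod to the naval battle example), and counting names is only a crude proxy for "more things".

```python
class Ship:
    """Hypothetical class, used only to illustrate the point."""

    fleet = "blue"  # shared by every instance
    length = 3      # shared by every instance

    def __init__(self, row: int, col: int):
        self.row = row  # the only data specific to this object
        self.col = col

    def cells(self) -> list[tuple[int, int]]:
        return [(self.row, self.col + k) for k in range(self.length)]

    def is_hit(self, row: int, col: int) -> bool:
        return (row, col) in self.cells()


def own_names(cls: type) -> list[str]:
    # names defined in the class body, leaving out Python's dunder machinery
    return [name for name in vars(cls) if not name.startswith("__")]


ship = Ship(2, 3)
print("class:   ", own_names(Ship))
print("instance:", list(vars(ship)))
```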

Compare different representations, more analog or more digital, and in particular compare the yield of digital and analog representations (naval battle).

To represent bitmaps of length n, we can store all the bitmaps of this length and code them (code length log2(2^n) = n bits). That is very heavy in storage (n·2^n bits), but very rapid in generation.

If we have to generate strings of n bits, but are interested only in c strings among the 2^n possible strings, the table has length n·c and the calling word has length about log2(c). Each call then saves about n - log2(c) bits, at the price of n·c bits of table.
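The two storage strategies can be compared numerically. A minimal sketch, counting sizes in bits and taking the calling word as the smallest whole number of bits that can index the stored strings; the function names are hypothetical.

```python
def full_table(n: int) -> tuple[int, int]:
    """All 2**n bitmaps of length n stored: (table bits, calling-word bits)."""
    return n * 2 ** n, n


def selective_table(n: int, c: int) -> tuple[int, int]:
    """Only c chosen strings of n bits stored: (table bits, calling-word bits)."""
    index_bits = (c - 1).bit_length()  # exact ceil(log2(c)) for c >= 1
    return n * c, index_bits


print(full_table(16))
print(selective_table(64, 1000))
```

For 64-bit strings of which only 1000 matter, a 64,000-bit table lets a 10-bit word stand for each string, whereas storing all 2^64 strings is out of the question.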

We should study here the optimal triplets: object family / table / programs. That could be done at three levels: program number, program table (or table number).

Note: human language analysis is difficult here, since it combines written and oral language.

6.17. A representation adapted to aims

How should representations be stored for an automatic vehicle with GPS, or a cruise missile?

I should say: a network of points/vectors, plus terrain modelling.

important aspects: obstacles, standard routes
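The "net of points/vectors" with standard routes and obstacles maps naturally onto a weighted graph searched with Dijkstra's shortest-path algorithm. A minimal sketch, assuming straight-line distances between hypothetical waypoints and treating an obstacle as a closed route segment; terrain modelling is left out.

```python
import heapq
import math

# Hypothetical waypoints (x, y in km) and standard routes between them
WAYPOINTS = {"base": (0, 0), "A": (4, 0), "B": (4, 3), "C": (8, 3), "target": (8, 6)}
ROUTES = [("base", "A"), ("A", "B"), ("base", "B"), ("B", "C"),
          ("C", "target"), ("B", "target")]


def build_graph(waypoints, routes, blocked=frozenset()):
    """Adjacency lists weighted by straight-line distance; blocked segments are skipped."""
    graph = {name: [] for name in waypoints}
    for u, v in routes:
        if (u, v) in blocked or (v, u) in blocked:
            continue  # an obstacle closes this segment in both directions
        d = math.dist(waypoints[u], waypoints[v])
        graph[u].append((v, d))
        graph[v].append((u, d))
    return graph


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm: returns (distance, list of waypoints)."""
    queue = [(0.0, start, [start])]
    done = set()
    while queue:
        dist, node, path = heapq.heappop(queue)
        if node == goal:
            return dist, path
        if node in done:
            continue
        done.add(node)
        for nxt, d in graph[node]:
            if nxt not in done:
                heapq.heappush(queue, (dist + d, nxt, path + [nxt]))
    return math.inf, []


print(shortest_route(build_graph(WAYPOINTS, ROUTES), "base", "target"))
print(shortest_route(build_graph(WAYPOINTS, ROUTES, {("B", "target")}), "base", "target"))
```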

internal role of representations (dream, imagination, fancy, entertainment, sexual fantasy)

by definition, a representation is not supposed to be used for itself, but to replace (represent, be a representative of) another object or set of objects

truth is not the only valuation of representations; there is also pertinence, and more generally the contribution to L:

- as a reserve in long-term memory that S will access when some problem has to be solved, or in a time of under-stimulation;
- as a tool to control the universe.

- as a tool to control the universe

a major aspect is the sharing of representations (and other resources)

language, conventions, even with oneself

etiquette

also symbols, codes, secret codes

with whom do I share?

the dominant one imposes

"common knowledge"

major role of the Internet

catalogs, nomenclatures, types, classes (SDK): select among these resources to build

life systematics; all the large systems of names

thesaurus

autonomy and motricity of representations; see Time

In the traditional world, an enormous gap separated representations from the represented objects. From mass and energy standpoints, there was no common scale between a text or a painting and the person or the country described. The difference also stemmed from the difficulty, and often the impossibility, of duplicating the thing, whereas a copy of the representation was comparatively easy. (That is of course less true for sculpture or the performing arts.) "The map is not the territory" was a fundamental motto of any epistemology.

The Gutenberg era widened the gap still further, notably with the massive reproducibility of texts and images. And even within this field, the progress of printing in the 20th century added high-quality color at cheap prices, as one can see in any magazine shop, if not even in the daily newspapers.

In DU, as long as human beings do not need a connection with the material world, this gap vanishes. An exact copy is possible at low cost. Representations of objects may play a role of mass reduction for easier use, but they remain in the low materiality of electronic devices.

In parallel, human beings have learned to enjoy representations, to the point of living in purely electronic ("virtual") worlds, where representations stand for themselves and maps no longer need a territory to be valuable, though they call for appropriate scale changes for human efficiency or pleasure.

of what else?

Sound: a succession of values in time, with structure, phonological or musical.

Inappropriate for DU. It was useful in the material world (rather easy to generate, less easy to get back).

being autonomous, S cannot be a representation of anything other than itself: a representation is dependent; it must be "faithful" to what it represents

8.27. Yield

(with respect to matter)

Yield is first perceived in the metric relation between organs and functions. We look here for a first approach, purely formal and digital. It will be developed later (see autonomy, etc.) in transcendental values, then, in a historical analysis, in the general law of progress.

Another definition: yield is the ratio between the functional value of a system (more precisely, a subsystem) and its cost.

To study this law and its effectiveness in the field, it is not mandatory that cost and functional value be measured in the same units [Dhombres 98].

Nevertheless, a proper generalization of the law must make it possible to compare and combine different technologies. So we must look for as much generality as we can.

We propose to use the KC for the numerator as well as for the denominator.

For the numerator, we will talk of functional complexity: the variety and sophistication of the function of the system, or of the different functions that contribute to its value. We could connect with value analysis concepts, but this method is seldom formalized as much as we need here.

For the denominator, we talk of organic complexity, i.e. the number of organs and components and the sophistication of their assembly, as well as their elementary complexity.
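Using the KC on both sides of the ratio can be sketched with compressed length as a crude stand-in for the KC of a description. A minimal sketch under loud assumptions: zlib gives only an upper bound on the KC (and on tiny strings its fixed overhead weighs heavily), the function is described by a truth table, and the organs by a netlist text in a hypothetical format. Both hypothetical designs realize the same XOR function.

```python
import zlib


def kc_estimate(description: str) -> int:
    # compressed length in bytes: a crude upper bound on the KC, not the KC itself
    return len(zlib.compress(description.encode(), 9))


def yield_ratio(function_desc: str, organ_desc: str) -> float:
    # functional complexity / organic complexity, both estimated the same way
    return kc_estimate(function_desc) / kc_estimate(organ_desc)


XOR_TABLE = "00:0 01:1 10:1 11:0"  # the function, shared by both designs
DESIGN_NAND = "n1 = NAND a b\nn2 = NAND a n1\nn3 = NAND b n1\nout = NAND n2 n3"
DESIGN_XOR = "out = XOR a b"

print(f"4 NAND gates: {yield_ratio(XOR_TABLE, DESIGN_NAND):.2f}")
print(f"1 XOR gate:   {yield_ratio(XOR_TABLE, DESIGN_XOR):.2f}")
```

The single-gate design scores higher only because XOR is taken as a primitive organ; its internal complexity is hidden, which is exactly the kind of convention a general law would have to fix.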

8.26. Supports and channels evaluation

Sensorial saturation

4 view, from 1D to 3D

2 sound

2 forces

1/2 smell

1/2 taste

TOTAL 9

Quality/fidelity, resolution

1. minimal, for recognition

2. rough, lines

3. realistic, line

4. very fine

5. perfect

Interaction level

1. purely passive

2. choice, swap

3. joystick

4. intervention in scenario

5. creation in depth

Computing power

2. micro

3. mini

4. maximum possible

Price per hour.

The interaction of values will settle itself in the contradictions.

The DR or "structural superabundance" is more important than the other DR (notably the basic material DR). Soon there will be a sort of chaos by over-determination, hence a large "chaotic" space where values can be applied and sense can appear.

Error = a kind of offset to be reduced. We are back to emergence problems, cybernetics: build in order to predict better.

To reduce the offset, we must:

- give up interest
- enhance the model
- admit that it is only probabilistic
- look more widely at values

Diachronic: over several cycles. Then we can say, for instance, that this error occurs very seldom and may be neglected, or that it is cyclical and can be dealt with as a feature, or predicted and corrected by an ad hoc algorithm.

Synchronic: study the bit, its position among others; similar to the diachronic case.
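The diachronic analysis can be sketched as a classification of the cycles in which an error was observed. The rarity threshold and the rule "constant gap means cyclical" are illustrative assumptions, not criteria given in the text; the error cycles must be listed in increasing order.

```python
def classify_errors(error_cycles: list[int], total_cycles: int,
                    rare_rate: float = 0.001) -> str:
    """Diachronic view: classify an error from the (sorted) cycles in which it occurred."""
    if len(error_cycles) / total_cycles < rare_rate:
        return "negligible"
    gaps = {later - earlier for earlier, later in zip(error_cycles, error_cycles[1:])}
    if len(gaps) == 1:
        return f"cyclical, period {gaps.pop()}: predict and correct"
    return "irregular: enhance the model"


print(classify_errors([5], 10_000))
print(classify_errors([3, 13, 23, 33], 40))
print(classify_errors([1, 2, 7, 20], 40))
```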

Around this, we can have algorithms for the cost/yield of these enhancements, the importance of this bit for the L of S, and the cost of a model upgrade...

To have an evaluation of "good", S must be able to see that its O impacts its I. If this is direct, we are back to E.

Then, some kind of "distancing" from the outside world, and even from the internal world: S may survey its E.


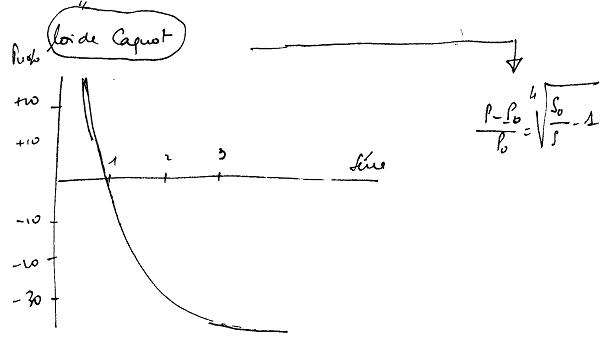

8.30. Grosch

Yield increases as the square of mass.

Conversely, see the positive effects of networks.

But Grosch's law was designed for the computers of the 1970s. How can it be generalized?
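Grosch's law is usually stated as: computing power grows as the square of the cost of the machine. The sketch below, with an arbitrary constant `k`, shows the consequence that gave the law its weight: under it, one machine costing the whole budget outperforms two machines costing half each.

```python
def grosch_power(cost: float, k: float = 1.0) -> float:
    """Grosch's law: computing power proportional to the square of cost."""
    return k * cost ** 2


budget = 10.0
one_big = grosch_power(budget)            # one machine using the whole budget
two_small = 2 * grosch_power(budget / 2)  # two machines at half the budget each
print(one_big, two_small, one_big / two_small)
```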

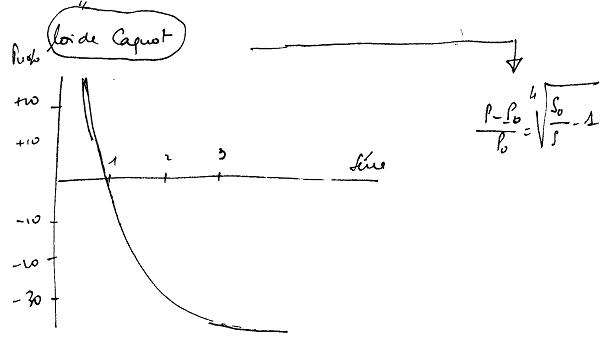

8.31. Caquot's Law


We could relate the lines not to I/O, but to an internal communication. Here, change the model of finite automata, and deal directly with space/time (bit·cycle). We are close to gates, and E becomes a line:

Caquot's law about serialized production