Language

The transition from speech to written text is not always straightforward. Here, Thomas Aquinas dictating, after a drawing published in Punch, c. 1955.

Work in progress.

Last revised 9/4/2014. See literature, programming.

1. The central role of language

2. Metrics on languages and texts

3. Terminology and ontology

4. Extension and comprehension

5. Paradigm, theory, system

6. Core words and generation/factors

7. Arts and languages

1. The central role of language

Language is the second digital level that constitutes mankind, above the first one, deeply hidden in our DNA. It is so natural for us to use it that we generally forget:

- the considerable effort we made in our youth to acquire it, from basic vocabulary to spelling and reading, the rules of grammar, the customary courtesies and, for some of us, the professional skill of a writer or speaker;

- the fact that it is fundamentally digital, and even binary, as Ferdinand de Saussure [Saussure] showed at the end of the 19th century, seeing in it a "system of oppositions", in today's words, bits.
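Saussure's "system of oppositions" can be pictured in bits. The sketch below is a minimal illustration under stated assumptions: it encodes six consonants with three binary features (voiced, labial, nasal), a simplified distinctive-feature table borrowed from later phonology rather than from Saussure himself, and counts how many oppositions separate two phonemes. The names `FEATURES` and `oppositions` are hypothetical.

```python
# Simplified, illustrative feature table (an assumption, not Saussure's notation):
# each phoneme is a tuple of binary oppositions (voiced, labial, nasal).
FEATURES = {
    "p": (0, 1, 0),
    "b": (1, 1, 0),
    "m": (1, 1, 1),
    "t": (0, 0, 0),
    "d": (1, 0, 0),
    "n": (1, 0, 1),
}

def oppositions(a: str, b: str) -> int:
    """Number of binary features on which two phonemes differ (Hamming distance)."""
    return sum(x != y for x, y in zip(FEATURES[a], FEATURES[b]))

# A minimal pair such as "pin"/"bin" rests on a single opposition: one bit.
print(oppositions("p", "b"))  # 1
print(oppositions("p", "n"))  # 3
```

Every phoneme in the table gets a distinct bit pattern, so the table itself works as a small binary code.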

Over the centuries, language developed increasingly formalized forms so that logical operations could work correctly. Then it took mathematical forms to allow calculation (see for instance La révolution symbolique. La constitution de l’écriture symbolique mathématique [The symbolic revolution: the constitution of symbolic mathematical writing], by Michel Serfati, Editions Petra, Paris, 2005).

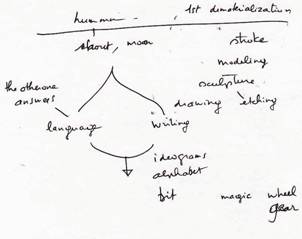

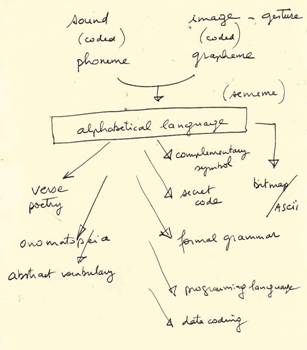

Language emergence, a tentative scheme

It was perceived very early that language bears some magic, from the Bible (the first chapters of Genesis and of St John's Gospel) to Harry Potter, without forgetting Ali Baba.

At last came programming, opening the wide horizons of the information and Internet age. And, as we show in our paper Form and matter, there is really something magic here: from a finite object, a program, we can generate another finite object, a (pixelized) image for instance, using a finite object (the computer), but through mathematical functions whose continuity cannot be defined without calling on infinity (topologically defined).
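A minimal sketch of this finite-to-finite passage through the continuous, assuming a disk as the mathematical function: a few lines of Python (the finite program) sample the continuous condition x² + y² ≤ r², defined for infinitely many real points, at a finite grid of pixel centres, and return a finite raster. The function name `render_disk` and the ASCII output are illustrative choices.

```python
def render_disk(size: int, radius: float) -> list[str]:
    """Sample the continuous disk x**2 + y**2 <= radius**2 on a size x size grid.

    Pixel centres are mapped onto the square [-1, 1] x [-1, 1]; '#' marks
    pixels inside the disk, '.' pixels outside.
    """
    rows = []
    for j in range(size):
        y = (j + 0.5) / size * 2 - 1
        row = []
        for i in range(size):
            x = (i + 0.5) / size * 2 - 1
            row.append("#" if x * x + y * y <= radius * radius else ".")
        rows.append("".join(row))
    return rows

for line in render_disk(12, 0.8):
    print(line)
```

Raising `size` refines the image without changing the program text: the continuous definition stays the same, only the sampling changes.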

The linguistic model is strongly present in art analysis and criticism. Saussure is the main reference, but it really took off with structuralism and the generative grammars of the 1970s.

Languages are powerful toolsets for creating beings, whether abstract or representing other beings. Articulated language was created by mankind, but it was prepared by a progression of communication systems among animals. Languages are constitutive of computers.

A major, constitutive feature of languages is that they are shared among populations of beings, which allows shorter and more efficient communication. This implies conventions, established by trial-and-error learning (animal and primitive human language), possibly completed by explicit standardization efforts (modern languages). Sharing, that is, externalizing the code, works better in digital form, thanks to the very low error rate when copying.
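Why copying favours digital codes can be illustrated with a small simulation under assumed parameters (Gaussian noise of standard deviation 0.05 at each copy, 50 successive copies): the analog copy keeps and accumulates the noise, while the digital copy is regenerated at each step by thresholding at 0.5. The function `copy_generations` and its parameters are hypothetical.

```python
import random

def copy_generations(bits: list[int], generations: int, noise: float, seed: int = 0):
    """Copy a message repeatedly, once as analog levels and once as regenerated bits.

    Returns the final digital copy and the largest deviation of the analog copy.
    """
    rng = random.Random(seed)
    analog = [float(b) for b in bits]
    digital = list(bits)
    for _ in range(generations):
        # Analog copy: the noise is kept and adds up from one generation to the next.
        analog = [v + rng.gauss(0.0, noise) for v in analog]
        # Digital copy: the same kind of noise is added, then each level is snapped to 0 or 1.
        digital = [1 if b + rng.gauss(0.0, noise) >= 0.5 else 0 for b in digital]
    analog_error = max(abs(a - b) for a, b in zip(analog, bits))
    return digital, analog_error

message = [1, 0, 1, 1, 0, 0, 1, 0]
digital, analog_error = copy_generations(message, generations=50, noise=0.05)
print(digital == message, round(analog_error, 3))
```

The analog deviation of each value grows like noise × √generations, whereas the digital copy stays exact as long as no single-step error crosses the threshold.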

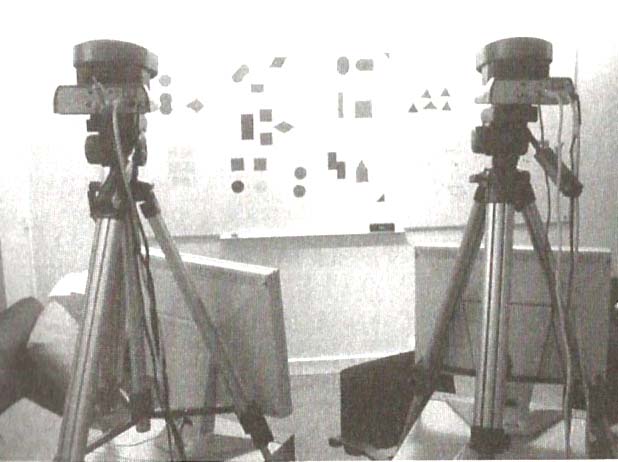

Two robots creating an ad hoc language to describe what they see. Image from Kaplan (Sony CSL).

Languages can be created automatically by artificial beings ([Kaplan 2001]).

Languages have major disadvantages:

- they are conventional and not directly intuitive for living beings; hence they must be learned, or be constitutive of the systems (if one may speak of an intra-neuronal language), or require, on the machine that uses them, a specific translation tool (interpreter, compiler);

- they differ from one community to another, hence require translation, which generally entails losses (even from computer to computer).

But they have an exclusive ability: reference to signified beings and, still more crucial, self-reference and hence recursion, which brings the finite inside the finite and nests complexity into what looks linear at first.

However, languages are never totally digital. They always encapsulate some residual analogies and fuzzy parts, even leaving aside secondary aspects of speech (alliteration, prosody) and typography (font, page layout).

The first presentation of the Star Wars robots drew on sophisticated linguistic research. But, as far as we know, this track has not been explored further in the series.

Formal languages: BNF (Backus normal form, or Backus-Naur form).
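To make the BNF remark concrete, here is a hypothetical toy grammar and a minimal recursive-descent evaluator in Python. The grammar is an illustration chosen for brevity, not taken from any standard; its recursive rule `<term> ::= "(" <expr> ")"` is what lets a linear string carry nested structure, and parsing is what turns the text into operations.

```python
# Hypothetical toy grammar in BNF (illustrative only):
#   <expr>  ::= <term> | <term> "+" <expr>
#   <term>  ::= <digit> | "(" <expr> ")"
#   <digit> ::= "0" | "1" | "2" | "3" | "4" | "5" | "6" | "7" | "8" | "9"

DIGITS = "0123456789"

def parse_expr(text: str, pos: int) -> tuple[int, int]:
    """Parse an <expr> at pos; return its value and the position after it."""
    value, pos = parse_term(text, pos)
    if pos < len(text) and text[pos] == "+":
        right, pos = parse_expr(text, pos + 1)  # right recursion, as in the rule
        value += right
    return value, pos

def parse_term(text: str, pos: int) -> tuple[int, int]:
    """Parse a <term> at pos: a single digit or a parenthesized <expr>."""
    if pos < len(text) and text[pos] in DIGITS:
        return int(text[pos]), pos + 1
    if pos < len(text) and text[pos] == "(":
        value, pos = parse_expr(text, pos + 1)
        if pos >= len(text) or text[pos] != ")":
            raise ValueError(f"expected ')' at position {pos}")
        return value, pos + 1
    raise ValueError(f"unexpected input at position {pos}")

def evaluate(text: str) -> int:
    """Accept a string only if the whole of it is a well-formed <expr>."""
    value, pos = parse_expr(text, 0)
    if pos != len(text):
        raise ValueError(f"trailing input at position {pos}")
    return value

print(evaluate("(1+2)+(3+(4+5))"))  # 15
```

A string outside the language, such as `"(1+2"`, is rejected rather than approximated: the text/operations mapping is exact only for well-formed expressions.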

Generative languages. Balpe. Generative grammars.

Is Midi a language?

- Gau: 4 pages about the relations between digital cinema and language.

A programming language does not contain assertions. Declarative languages are for AI.

Text and image can be rather firmly distinguished from each other. An image is a copy of some part of the world: it is a raster. It can be interpreted only by copying it or through elaborate recognition functions. Text, on the contrary, is coded. When a processor gets an image as input, it can only copy it and transmit it bit by bit, unless it analyzes it through an elaborate process. For text, parsing makes operations possible. But a text-to-operations mapping works perfectly only with formal languages, and with languages "understood" by that processor.

Between text and image lies streaming time: series of images, series of symbols.

Images are basically 2D, but may refer to 3D, or be "abstract" images, without reference to a Euclidean space, let alone a physical one.

Why and how far are text structures richer than images?

A written text is a kind of image, with a particular structure.

More or less formal languages

- natural,

- grammar/dictionary,

- prose/verse,

- specialized languages (law, military, poetic, love... mystical).

Quantify the formalization: length of grammars, dictionaries, alphabets, axioms.

Various tracks for language formalization.

2. Metrics on languages and texts

Thesis: a representation may be much larger than the represented being. Scale 1 is not a limit at all. This is true in the digital universe in general, but also for representations of the physical world (for instance micro-photography, medical images, images of atoms...). Example: "the bit at address 301523".
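The example can be checked with simple arithmetic. This Python sketch assumes 8 bits per character, as in ASCII: the phrase designating a single bit takes 200 bits, and even the bare address, written in binary, needs 19 bits.

```python
# The "being": a single bit. Its representation: a phrase that designates it.
description = "the bit at address 301523"
represented_bits = 1
representation_bits = 8 * len(description)  # assumption: 8 bits per character (ASCII)
address_bits = (301523).bit_length()        # bits needed just to write the address in binary

print(len(description), representation_bits, address_bits)  # 25 200 19
```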

Number of letters in the alphabet. Of phonemes.

Number of words in the dictionary. Catach

Length of the grammar (...)

The question of optima, and the evaluation of the different languages (and writing systems).

For programming languages, one may count:

- the number of different instructions (CISC and RISC), and its relation to the word length of the target machine;

- the number of data types (typing power);

- the number of accepted characters (this mattered for older codes, which did not accept accents; that has changed with Unicode);

- the size of the necessary interpreter or compiler;

- the size of the machines able to compile and execute them.

The RNIR (French Social Security number) example shows a limit case: there exists an optimum (or perhaps a semi-optimum) in coding economy. In other cases, we can devise more delicate, and probably more interesting, structures. One idea would be, at least from a research perspective, to aim at a methodical cleaning of the remaining analogisms in our languages.

Function points (see Wikipedia) and more generally metrics on programming.

See Zipf's law (Wikipedia).
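Zipf's law states that, in a large body of text, a word's frequency is roughly inversely proportional to its rank in the frequency table, so that rank × frequency stays roughly constant. A minimal Python sketch of the check; the corpus is a hypothetical toy built to follow the law exactly, not real data, and `rank_frequency` is an illustrative name.

```python
from collections import Counter

def rank_frequency(text: str) -> list[tuple[int, str, int]]:
    """Return (rank, word, frequency) triples, most frequent word first."""
    counts = Counter(text.lower().split())
    ranked = counts.most_common()
    return [(rank, word, freq) for rank, (word, freq) in enumerate(ranked, start=1)]

# Hypothetical toy corpus whose counts are 60, 30, 20, 15, 12, i.e. 60 / rank.
corpus = " ".join(["the"] * 60 + ["of"] * 30 + ["and"] * 20 + ["to"] * 15 + ["in"] * 12)

for rank, word, freq in rank_frequency(corpus):
    print(rank, word, freq, rank * freq)  # rank * freq is 60 on every line
```

On a real text the product is only approximately constant, and the fit is usually poorest for the rarest words.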

3. Terminology and ontology

The word: some thousands in one language.

How many bits for a name? Written out directly, 10 characters × 5 bits, that is about 50 bits per word, far more than needed just to number the words of the language...
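The comparison can be made explicit. With 5 bits per character (enough for 26 letters, since 2^5 = 32), a 10-character spelling costs 50 bits, while simply numbering the words of a dictionary needs only about the base-2 logarithm of its size. The two dictionary sizes below are assumed orders of magnitude, not counts from any particular dictionary, and the helper names are hypothetical.

```python
def spelling_bits(characters: int, bits_per_char: int = 5) -> int:
    """Bits used when a word is written out letter by letter."""
    return characters * bits_per_char

def numbering_bits(dictionary_size: int) -> int:
    """Bits needed to give each of dictionary_size words its own number (0 .. size-1)."""
    return (dictionary_size - 1).bit_length()

print(spelling_bits(10))  # 50
for size in (5_000, 50_000):  # assumed dictionary sizes
    print(size, numbering_bits(size))
```

50 bits could number about 10^15 distinct words, whereas 13 to 16 bits suffice for the assumed dictionaries.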

Structures can be found in the dictionary: thesaurus, valuations (frequency of use, from basic to very rare), a central language plus dedicated languages (medicine, law, theology, computing...).

A definition is a text assigned to a word (sometimes to a short sequence of words, or expression). In formal languages, a word can be replaced by its definition without changing the meaning of the phrase. In other languages, this does not work correctly. In natural languages, dictionaries are made for human users and are more a sort of intuitive guide, including contexts and possibly images (bitmaps).
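Substitutability in a formal language can be demonstrated on a toy example. In this Python sketch, the vocabulary (`two`, `four`) and the helper names `expand` and `meaning` are hypothetical: each defined word is replaced by its definition until only core symbols (digits, operators, parentheses) remain, and the phrase has the same value before and after substitution. `eval` is used only because the toy input is trusted; the `max_rounds` guard stops the expansion if definitions loop back on each other.

```python
import re

# Hypothetical toy formal language: defined words stand for arithmetic expressions.
DEFINITIONS = {
    "two": "(1 + 1)",
    "four": "(two + two)",
}

WORD = re.compile(r"[a-z]+")

def expand(text: str, definitions: dict[str, str], max_rounds: int = 100) -> str:
    """Replace defined words by their definitions until only core symbols remain."""
    for _ in range(max_rounds):
        new_text = WORD.sub(lambda m: definitions.get(m.group(0), m.group(0)), text)
        if new_text == text:
            return text
        text = new_text
    raise ValueError("no fixed point: the definitions probably contain a loop")

def meaning(text: str, names: dict[str, int]) -> int:
    """Evaluate a trusted toy expression, with defined words bound to values."""
    return eval(text, {"__builtins__": {}}, names)

# Values of the defined words, obtained from their fully expanded definitions.
values = {word: meaning(expand(word, DEFINITIONS), {}) for word in DEFINITIONS}

phrase = "four * two"
print(expand(phrase, DEFINITIONS))  # ((1 + 1) + (1 + 1)) * (1 + 1)
print(meaning(phrase, values), meaning(expand(phrase, DEFINITIONS), {}))  # 8 8
```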

Petit Larousse: Statement of the essential characteristics, of the qualities proper to a being or a thing.

Meaning of the word that designates it.

In TV: number of lines subdividing the image to be transmitted.

- discriminatory, sometimes ideological, character;

- statistical characteristics;

- expression of a strategy.

Webster (College edition):

1. a defining or being defined

2. a statement of what a thing is

3. a statement or explanation of what a word or phrase means or has meant

4. a putting or being in clear, sharp outline

5. the power of a lens to show (an object) in clear, sharp outline

6. the degree of distinctness of a photograph, etc.; in radio & television, the degree of accuracy with which sounds or images are reproduced

A nomenclature is a sort of (semi-)formal dictionary, with a "record" structure. P. Berthier gives a typology (in L'informatique dans la gestion de production [Computing in production management]; publisher and date unknown):

- on a technical basis: letters and figures alternate, giving for example the part dimensions;

- based on ranges: letters and figures preselected for part families and sub-families;

- sequential, incremental (numeric or alphanumeric).

4. Extension and comprehension

Extension and comprehension are defined for terms, which we shall consider here as synonymous with concepts. Extension gives the list of occurrences of the concept, or some criterion for finding them. Comprehension groups all the features specific to this concept.

In our "there is that" model, extension may be seen as a list of addresses (instantiations), and comprehension as the type or class. See 9
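A minimal Python sketch of this reading, over a hypothetical universe of eight addresses: comprehension is a predicate (the type), and extension is the list of addresses where it holds, in the spirit of the notations E = {x ; x > 1} and E = {a, b, c...}. The universe and function names are illustrative assumptions.

```python
from collections.abc import Callable

# Hypothetical universe: address -> being (here, small integers).
universe = {0: 3, 1: 0, 2: 7, 3: 1, 4: 2, 5: 1, 6: 9, 7: 0}

def comprehension(being: int) -> bool:
    """The 'that': the defining feature of the concept, here x > 1."""
    return being > 1

def extension(predicate: Callable[[int], bool], world: dict[int, int]) -> list[int]:
    """The list of addresses where the concept is instantiated."""
    return [address for address, being in world.items() if predicate(being)]

print(extension(comprehension, universe))  # [0, 2, 4, 6]
```

The predicate is short and fixed, while the list of addresses grows with the number of instances: the two descriptions of the same concept scale differently.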

If we know how to measure, for each concept:

- the comprehension (the length of the associated text in the dictionary, for instance; more formally, we could limit ourselves to the length of the definition itself, leaving out the "encyclopedic" part of the dictionary, as in law one distinguishes the law proper from the jurisprudence);

- the extension, i.e. the number of occurrences of the word in the digital universe (or in any world, e.g. 6 billion human beings, a hundred Gothic cathedrals);

then we have a ratio giving the yield of the concept.
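A worked sketch of this ratio, assuming comprehension is measured as the length in bits of a definition (8 bits per character) and extension as a count of occurrences. The two definitions are hypothetical placeholders; only the occurrence counts come from the text above, and `concept_yield` is an illustrative name.

```python
def concept_yield(occurrences: int, definition: str, bits_per_char: int = 8) -> float:
    """Extension divided by comprehension: occurrences per bit of definition."""
    return occurrences / (bits_per_char * len(definition))

# Hypothetical short definitions; occurrence counts as given in the text.
human = concept_yield(6_000_000_000, "member of the species Homo sapiens")
cathedral = concept_yield(100, "large church built in the Gothic style")

print(f"{human:.3g} {cathedral:.3g}")
```

With definitions of similar length, the ratio is driven almost entirely by the extension, which is why "human being" yields far more per bit than "Gothic cathedral".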

For a given database with a given global capacity in bits, the base may comprise a large number of elements with few bits each (large extension, small comprehension) or a few words with large records.

Extension and comprehension can be taken as a kind of metrics. Extension would be a negative pressure (see below). Google transforms it into a positive pressure and temperature.

5. Paradigm, theory, system

Paradigms and theories are high-level assemblies: a long text, or a series of coordinated words.

Question: how may a theory be used by a being? Expert systems.

A paradigm may be defined as a "disciplinary matrix", literally framing research and giving the scientists gathered under this paradigm "typical problems and solutions"; it defines the study space and draws passageways in the set of possible research (La mesure du social. Informations sociales 1-2/79. CNAF, Paris, 1979).

Also: story, narration, saga, assertion, command.

Assertion + modality

deduction/inference

formal languages

“there is that”

Method, algorithm, description.

6. Core words and generation/factors

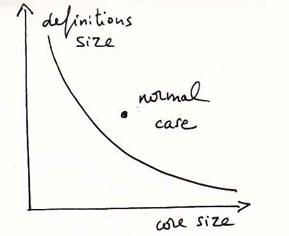

When the core of the dictionary is heavy, the other words are derived from it with short definitions, and conversely (step by step). Open question: in which language?

If we admit that the words in the core are not defined (like reserved words in programming languages?), and that the other ones are totally defined using the core words, or else defined from each other in an intuitive way, with constant length, then we have:

Circles in the core.

If we admit that concatenation is a sort of definition, we can have only one word in the dictionary, plus the + (concatenation) operator. If we have n words, they can be numbered from 1 to n.

Relation between the size of the core and the size of the definitions: the larger the core, the shorter the definitions can be.

There is redundancy, etc. Example: from a catalogue of works, make the most diverse, or the most representative, selection.

Topological problems of a dictionary: since a definition in a dictionary can only be given with other words of the dictionary, there are necessarily loops.

Graph theory applies here.

Unless some words are given as "axiomatic" and not to be defined.

Indirect (loop) recursion.
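The loops can be found with a standard graph algorithm. A minimal Python sketch, assuming a toy dictionary in which each word maps to the words used in its definition: a depth-first search reports a definition loop, and declaring some words axiomatic (left undefined) breaks it. The dictionary and the function name `find_loop` are hypothetical.

```python
# Hypothetical toy dictionary: word -> words used in its definition.
DICTIONARY = {
    "large": ["big"],
    "big": ["large", "size"],
    "giant": ["very", "large"],
    "size": [],
    "very": [],
}

def find_loop(dictionary: dict[str, list[str]], axioms: frozenset[str] = frozenset()):
    """Return one definition loop as a list of words, or None if there is none.

    Words listed in `axioms` are treated as undefined: their definitions are ignored.
    Words used but absent from the dictionary are treated as already explored.
    """
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    colour = {word: WHITE for word in dictionary}
    path: list[str] = []

    def visit(word: str):
        colour[word] = GREY
        path.append(word)
        if word not in axioms:
            for used in dictionary.get(word, []):
                state = colour.get(used, BLACK)
                if state == GREY:  # back edge: we came round to a word on the path
                    return path[path.index(used):] + [used]
                if state == WHITE:
                    loop = visit(used)
                    if loop:
                        return loop
        path.pop()
        colour[word] = BLACK
        return None

    for word in dictionary:
        if colour[word] == WHITE:
            loop = visit(word)
            if loop:
                return loop
    return None

print(find_loop(DICTIONARY))                               # ['large', 'big', 'large']
print(find_loop(DICTIONARY, axioms=frozenset({"large"})))  # None
```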

Track to recursion. The best wt: maximal difference. But if there is repetition, other choices are based on relations between beings (WFE, well-formed expressions, grammar). Prime numbers.

We must analyze a given being:

- the rules of what it understands (language, WFE);

- its concrete environment: what pleases at a given time, for instance the puzzle piece;

- comprehension: the "that" and the details inside, E = {x ; x > 1};

- extension: the list of locations where "that" is instantiated, E = {a, b, c...}.

If we have all the beings (filling all n·2^n bits), the comprehension is total, and the extension does not add anything. If they are well ordered, each being is identical to its number. The whole space is then of no interest.

On the other hand, if the beings have few common parts (in some way, if there are far fewer beings than 2^n), comprehension will be only a small part of each being, and free, available parts remain.
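For small n the full case can be enumerated. In this Python sketch (n = 3 is an arbitrary choice, and `all_beings` a hypothetical name), the 2^n beings of n bits fill n·2^n bits and, when listed in order, each being is simply its own number written in binary, so its position says everything about it.

```python
from itertools import product

def all_beings(n: int) -> list[str]:
    """Every n-bit being, listed in lexicographic (= numeric) order."""
    return ["".join(bits) for bits in product("01", repeat=n)]

n = 3
beings = all_beings(n)
print(len(beings), n * len(beings))  # 8 24
# Well ordered: the being at position k is k written in binary.
print(all(being == format(k, f"0{n}b") for k, being in enumerate(beings)))  # True
```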

Inside a being, several types may combine.

Reduction of a being: "division" by all its types; the remainder bears its individual features, local and irreducible.

This local part can come from:

- locality stricto sensu (geographical location, proximity to a high-temperature S, software "localization");

- other abstract factors.

Ex.: male gender, more than 45 years old, born in
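A possible sketch of this "division", assuming beings and types are both described as attribute/value pairs: every type the being matches is factored out, and what is left is its local, individual remainder. The attribute names, values and types below are hypothetical, loosely following the example above.

```python
# Hypothetical types, each fixing some attribute values.
TYPES = {
    "male": {"gender": "male"},
    "over_45": {"age_over_45": True},
    "engineer": {"profession": "engineer"},
}

def divide(being: dict, types: dict[str, dict]) -> tuple[list[str], dict]:
    """Return the types the being belongs to and the attributes no type explains."""
    matched = [name for name, fixed in types.items()
               if all(being.get(attr) == value for attr, value in fixed.items())]
    explained = {attr for name in matched for attr in types[name]}
    remainder = {attr: value for attr, value in being.items() if attr not in explained}
    return matched, remainder

person = {"gender": "male", "age_over_45": True, "height_cm": 178, "town": "Lyon"}
print(divide(person, TYPES))
# (['male', 'over_45'], {'height_cm': 178, 'town': 'Lyon'})
```

The more types the being matches, the smaller the remainder; what stays in it is what no available type can account for.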

If the type is long: a very long abstract description (comprehension).

A few types may be sufficient, with a short code to call them, but a type may call a long sequence (strong comprehension). This view has no meaning for the system itself, which has no memory of its own past or of its traces, but it can be meaningful for other parts of the digital universe.

The excess bits of a concrete S, relative to the necessary minimum (n·2^n bits for the 2^n beings), may be arranged:

- either canonically, with a bit zone giving a number, possibly split into several zones;

- or, on the contrary, with the factors distributed over the whole length of the being.

If we suppose that the system has been built by a succession of generating factors, it will in general be impossible to do the division to get them back (Bayesian analysis, public-key coding).
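The asymmetry between generating and "dividing" can be shown with prime factors, the principle behind public-key coding. In this Python sketch (hypothetical helper names; trial division is the simplest method, not the one used in practice), generating 10403 from its factors takes one multiplication, while recovering them tries 100 candidate divisors; for the numbers of several hundred digits used in public-key cryptography, this kind of search becomes hopeless.

```python
def generate(factors: list[int]) -> int:
    """Build a being from its generating factors: one multiplication per factor."""
    result = 1
    for factor in factors:
        result *= factor
    return result

def divide_back(n: int) -> tuple[list[int], int]:
    """Recover the prime factors of n by trial division; also count the divisors tried."""
    factors, trials, d = [], 0, 2
    while d * d <= n:
        trials += 1
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors, trials

n = generate([101, 103])
print(n, divide_back(n))  # 10403 ([101, 103], 100)
```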

We normally consider that "natural" beings bear an indefinite quantity of bits. Among them, we can select some bits for identification (extension) and description (comprehension). The extension (in locality) is implicitly given by the position of the being in the universe.

For a given population of beings, find all the factors, or a sufficient generative set of factors.

Let us take a frequent case: far more concrete bits than needed, for instance 500 images of 1 MB on a 500 MB CD. Pure differentiation then takes only 9 bits (2^9 = 512 ≥ 500). All the rest is "local".

Then we can analyze these 500 images, with equivalence classes giving generators.

Thesis. To obtain everything, it suffices that the product of the cardinalities of the classes be greater than or equal to the cardinality of the whole. Again, 9 classes of 2 elements are sufficient.
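A small check of this thesis in Python, assuming all classes have the same number of elements: we look for the smallest number of classes whose product of cardinalities reaches the number of beings. For the 500 images and binary classes this reproduces the 9 classes of the text; `classes_needed` is a hypothetical name.

```python
def classes_needed(population: int, class_size: int) -> int:
    """Smallest k such that class_size ** k >= population (class_size >= 2)."""
    if class_size < 2:
        raise ValueError("each class must have at least two elements")
    k, combinations = 0, 1
    while combinations < population:
        k += 1
        combinations *= class_size
    return k

for size in (2, 3, 10):
    print(size, classes_needed(500, size))
# 2 9
# 3 6
# 10 3
```

Larger classes mean fewer generators, but each generator then needs more bits to name its element.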

Thesis. More generally, we shall certainly find that the effect of the analysis (root) is maximized with generators of the same length and equiprobable.
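Under the usual information-theoretic reading of this thesis, the information a generator carries is its Shannon entropy, H = −Σ p·log2(p), which is maximal when all values are equiprobable. A Python sketch comparing balanced and unbalanced generators (the probabilities are arbitrary examples):

```python
import math

def entropy(probabilities: list[float]) -> float:
    """Shannon entropy, in bits, of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

print(entropy([0.5, 0.5]))            # 1.0
print(round(entropy([0.9, 0.1]), 3))  # 0.469
print(entropy([0.25] * 4))            # 2.0
```

A binary generator thus yields a full bit only when its two values are equally likely; 9 such generators give the 9 bits needed for the 500 images.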

Once the family of factors is given, we can compute the volume of all the beings if all of them were generated. Normally, it will be much larger than the actual concrete, or than the available space.

Seen this way, the concrete is much smaller than the abstract: imagination goes beyond reality.

Are “natural languages” so natural? Progressive emergence. The host community.

7. Arts and languages

See literature, programming.

- Laban language for choreography.

- Siteswap notation for juggling (a mathematical notation).
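Siteswap writes a juggling pattern as a repeating sequence of throw heights. A well-known property: a sequence a_0 ... a_(n-1) is juggleable if and only if the landing beats (i + a_i) mod n are all distinct, and the number of balls is then the average throw. A minimal Python sketch, limited to single-digit throws (an assumption: the notation also uses letters for higher throws); the function names are hypothetical.

```python
def is_valid(pattern: str) -> bool:
    """True if no two throws of the (single-digit) siteswap land on the same beat."""
    throws = [int(c) for c in pattern]
    n = len(throws)
    landings = {(i + t) % n for i, t in enumerate(throws)}
    return n > 0 and len(landings) == n

def ball_count(pattern: str) -> int:
    """Number of balls in a valid pattern: the average throw height."""
    if not is_valid(pattern):
        raise ValueError(f"{pattern!r} is not a valid siteswap")
    throws = [int(c) for c in pattern]
    return sum(throws) // len(throws)

for p in ("3", "441", "531", "543"):
    print(p, is_valid(p))
# 3 True
# 441 True
# 531 True
# 543 False
```

"543" shows that an integer average is not enough: its first two throws land on the same beat.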
