A Digital Ontology

Contents transferred into the DU series

Pierre Berger

Foreword: grand narrative and responsibility

Once upon a time, nearly four billion years ago, the first digital entity appeared on Earth: life emerged in the primeval soup.

Some millions of years ago, another fundamental jump happened, when our ancestors invented articulated language, with its deep but clearly digital system of binary oppositions [Saussure].

Some hundreds of years ago, Modern times drew Mankind out of its static views of the World, and presented History as progressive. Digitization was a major component of the new impetus, from a neatly digital way of writing numbers and computing, the digital slicing of time (the "foliot") and the cutting of printing elements into our present "cases" (upper and lower...), up to the philosophical division of problems recommended by Descartes.

In the 1940s, a number of theoretical and technical advances led to modern computer systems, and showed how central binary concepts and devices were, from mathematics to corporate management, including nuclear physics and military applications. Von Neumann was perhaps the best at tying all these trends into the digital synthetic concept.

In 1953, Watson and Crick deciphered the structure of DNA, and Life became conscious of its intimately digital nature.

In 1965, Moore formulated his law of growth for digital integrated circuits, which has since been transposed to all electronic devices. This exponential development law could be adapted to cover all kinds of technologies and the whole span of World history.

Since then, the digital coverage of the World has thickened and become denser each year, tending even to replace the hitherto dominant processes of production, consumption and entertainment by a transfer into "second worlds". These could well be, anyway, the one and only safe way for the future of Mankind, if they prove able to reduce the problems of matter/energy dissipation and pollution.

Still more fascinating, for better and for worse, the two digital tracks tend to merge into one, where bionics unites the digits of DNA (natural life) with those of electronic devices (artificial life).

A main concern is the weakening of all the borders which have hitherto protected our individuality. Our body extends beyond the skin with the proliferation of "prostheses", but is penetrated by rays, ultrasounds, drugs, surgical interventions, transplants and implants. Our soul projects itself to the limits of the universe and multiplies itself in virtual worlds, but our privacy offers little resistance to the commercial, administrative and military information networks. And it will go further with the direct link of the brain to the external world, to control it, or be controlled by it. And at the end, the brain-to-brain, telepathic relation? At present, properly inconceivable.

The commentators of today divide into pro- and anti-technology camps. The opposition is often expressed in dramatic terms, be they optimistic or pessimistic about the future of the Human species. That does not prevent a bit of schizophrenia, with persons spending their time on the web to stress the dangers of computers, and others who demand both a simplification of Administrative processes and a strict protection of privacy. This trend is reinforced by the new grand mythologies that have replaced both the Greco-Roman and the Christian doctrines: Star Wars and The Lord of the Rings. Here, even when advanced technology is shown, the ideal is clearly put not on progress, but on the restoration of a “natural equilibrium”. The “bad” (Sauron, Darth Vader) have the most advanced technology. The “good” (Obi-Wan Kenobi, Frodo) rely mainly on their “soul”.

Let us try to unify the knowledge fields implied in this saga. Let us try to go further, to show the depth of digitization, the riches of its perspectives and the subtlety of its possible theorization... while aiming to propose, with modesty, some guiding lines into our present and the building of our future.

We shall try to soothe these fears by showing that the digital entities have themselves their intrinsic limits and weaknesses, which we call globally "digital relativity". In some way, the global centralized control imagined by Orwell in his 1984 novel is fundamentally impossible. But we pay the price of these frailties with the ever increasing cost of safety, not to say "sanity" in our systems, with heavy anti-virus and firewalls of all sorts, at the individual as well as corporate and political levels.

Looking further, digital relativity also opens the way to the emergence, in digital "machines", of emotion, meaning, and art. Our Roxame software (among the works of so many "generative" artists or algorists) has begun to show how a "machine" can be considered as an actual artist in its own right, or, symmetrically, as an art critic. So explode the last bunkers of the "human exception". In spite of many failures, in spite of the still wide power gap separating our machines from our brains, the visions of AI (artificial intelligence) still have their legitimacy. Many thresholds have been crossed (beating a chess grandmaster; proposing rather correct translations of many texts...). At the same time, the limits of human rationality facing the over-complexity of the modern World are but too evident. Then, the coming of some kind of post-human entities, possibly hostile but why not friendly and somehow respectful of us, the proto-humans, as we are of our ancestors, is not so mad a perspective (as Asimov suggested).

At this point, optimism vs. pessimism is a rather private alternative, and indeed of little pertinence, as such global ways of thinking lead to simplistic or schizophrenic action and politics. What we call for is rather a due combination of creativity and vigilance, as far as we can, as far as the World's evolution depends on us.

We shall develop these themes in four parts.

1. The first one will show the generality of forms and structures met in the Digital universe. In that space, with its particular time, beings have their place and their roles. From the basic bits up to the global world, small and large beings develop their structures and co-exist. Some of them are representations, but a major part stands for itself. Among the different systems of assembling bits into entities or larger and larger series, language holds a pre-eminent place, for representations as well as for active beings, through programming.

2. Metrics and values will take the second part, and we shall propose some innovative concepts. Upon a sort of thermodynamic base, we shall consider the now classical, but still disconcerting concept of complexity, and draw the sketch of a method to evaluate the power of digital beings.

The very problems of these metrics will lead to the central issue of digital relativity, with its multiple sources, including but not limited to Gödelian logics. That will open the space to a concept of liberty for all digital beings, with its preservation and maximization as a general principle of development in the Digital Universe, thus extending to this domain the classical principle of least action. From this central principle can be studied the classical "transcendental" values: the true, the good and the beautiful. We shall start from the good, and consider the true as a particular kind of good, leaving the beautiful to the next part.

3. We shall jump to a more sophisticated group of issues with more explicitly cognitive beings, with their operations of assimilation and expression, surrounding a generative/decision core. At this level may emerge the problematics of emotion, and of meaning in its deepest form (from the DU standpoint). Hence a rich basis to look at beauty and art, as far as it may be practiced by digital beings, seen as the summit of development of our digital universe.

4. In the fourth part, we will connect our abstract construction with the "real" world, with its concreteness, its matter and energy, its relativity, and of course its "real time". Hence a look from DU into History, which may be seen as the emergence of digits in the physical world as well as the emergence of matter in digital constructs. We shall, in a perhaps too classical way, consider mankind, that is We, and I among us, in our particular and (should I proffer that truism) eminent role. But we shall see this role in perspective, not as some end of History, but as a major stage towards something higher, some Omega point, to speak like Teilhard... and so conclude on the vast drama in which our generations, willing or not, are the players.

5. We conclude with openings on our future, and what we can, and must, do to develop it along good lines.

Acknowledgments

This book has been, I must say, a quite solitary effort. Its interdisciplinary, technical and, for some, shockingly anti-humanist stand has not made dialogue and cooperation easy. I could not even find understanding in the reflection club I had founded in 1991, Le club de l'hypermonde, which, in spite of my efforts, went back to more conventional topics.

I must anyway give thanks to my family for the long hours stolen from them for this adventure, and to my brothers Joël (for his encouragement) and Marcel (for his precious hints and for providing references).

My thanks go mainly to some other thinkers, whose recent books have been instrumental in developing the present text, from the bases elaborated in my L'informatique libère l'humain, la relativité digitale (L'Harmattan 1999). The book contains an extensive bibliography (I can send it by mail, at cost price, for 20 euros).

The last decisive one is Mathématiques et sciences de la nature, la singularité physique du vivant, by Francis Bailly and Giuseppe Longo (Hermann 2006). This book is the most recent (as far as I know) expression of their long and deep cooperation, which I first appreciated during a seminar at ENS (École normale supérieure), at the beginning of this century. At the level of science they surf on, I confess that part of these lectures was well above my abilities. But, these days, I am not alone in sailing by dead reckoning on the vast seas of modern science. After all, is it not another aspect of digital relativity... From my standpoint, they remain too faithful to the "human exception" thesis, and hence under-estimate the importance and positivity of digital beings.

Another key book was La révolution symbolique, la constitution de l'écriture symbolique mathématique, by Michel Serfati. What he says of the Viète-Descartes-Leibniz intellectual revolution, and of the growing role of symbols in the development of science, came at the right time to feed my inquiry into the autonomy and self-development of digital beings. I tried to push him into my saga, with Renaissance mathematics as a major landmark between Aristotle and Von Neumann, but he resisted my lure into so risky a journey or, actually, one not so in line with his own historical feel. Fortunately, I found in Des lois de la pensée aux constructivismes, an issue of Intellectica (2004/2, no 39), edited by Marie-José Durand-Richard, a complement on the intermediary work of Babbage and Boole.

At times, the cause of the autonomy of digital beings, which are mainly artefacts at first sight, comes into conflict with the traditional humanist motto: humanity transcends any artefact. That is a very old debate, of course, from the Golem to Frankenstein and Asimov, from cybernetics to systemics, from Pygmalion to Totalement inhumaine, by Jean-Michel Truong [Truong]. I found fresh fuel for a new push along this way in the philosophical work of Jean-Marie Schaeffer, mainly his recent La fin de l'exception humaine [Schaeffer 2007]. Alas, he does not deal with the man/machine issue.

On the human sciences side, La technique et le façonnement du monde, mirages et désenchantement, edited by Gilbert Vincent [Vincent], shows the negative side of constructivism, and prompted me to answer these concerns with more... constructive views. Interesting ground along this axis has been ploughed by Razmig Keucheyan in Le constructivisme, des origines à nos jours [Keucheyan]. It is a rather detached view of constructivism, certainly not a pro-constructivism pamphlet. One of its most exciting themes is the connection it makes between various forms of constructivism, notably the sociological and artistic versions.

The same Jean-Marie Schaeffer also pushed into this kind of intellectual genetics, in his L'art de l'âge moderne, l'esthétique et la philosophie de l'art du XVIIe siècle à nos jours [Schaeffer 1992]. This last point resonated with my development of the painter-system Roxame, and with our reflections in the artistic group Les Algoristes, which Alain Lioret and I founded in 2006. His book Emergence de nouvelles esthétiques du mouvement [Lioret] concludes with an evocation of systems "which are based neither only on random, nor on human, nor on machine". Cooperation within the group, and mainly with Michel Bret and his neural networks, is presently in progress, and gives force to new artistic as well as theoretical developments, of which this book is, at present, the most abstract expression.

Let us also thank Jean-Paul Engélibert for his nourishing compilation L'homme fabriqué, récits de la création de l'homme par l'homme [Engélibert], which makes readily available in French 1182 pages of great classics, from Hoffmann to Truong, including Shelley, Poe, Villiers de l'Isle Adam, Wells, Capek, Huxley...

Readers may be surprised to see an English text based on such a French bibliography. That may be due partly to the rich life of French philosophical and interdisciplinary thinking in our times. In spite of its critics, "French theory" is not dead, even if it now takes other ways than in the good old times of Baudrillard. As far as I know (but we know so little, nowadays), there is no such effort of global thinking beyond the limits of the Hexagon...

As for me, with apologies to some of my French readers, who still have some reluctance towards the language of Von Neumann, I take it as an orientation to the future, which I see as globally speaking, if not English or American, at least some not too broken species of Globish. Perhaps I am wrong here also, and the poor times of the present United States will leave the way open to Spanish or Chinese as the most common language. For the time being, in the business and science worlds, English keeps a strong leadership. And I think that the best to be wished for French culture is to cut the umbilical cord with my mother language, and not to wait for translators to give the largest possible audience to our ideas.

Anyway, after some 40 years of daily writing in French (mainly as a professional computer journalist), and trying to pay the best homage to my native idiom, I have more pleasure in writing the language of Shakespeare or Oscar Wilde than of Corneille or Victor Hugo, and even of my children and grand-children.

An ambition with such a wide zoom aperture cannot avoid some naiveties here and there, and I hope that the reader will show understanding for my shortcomings. Better still, warm thanks to those who will take time to send remarks, to which my email pmberger@orange.fr will give a hearty welcome.

A digital cosmology

0.0. A cosmology

A cosmology aims to describe the whole universe, its history and its developmental laws. A digital cosmology sees it as an indefinitely large set of bits (each one having at a given time the value 0 or 1, or equivalently, true/false, black/white). It is a little easier, and rather equivalent (we shall discuss that later), to consider it as a text, a sequence of characters, including, of course, the numerical characters, or as a hyperplane of any dimension.

We could as well use the word "cosmogony" since we give some basic hints about the origins. But such a presentation of the past aims, of course, at its extension into the future: constant laws are useful if they are here to stay, and support not only survival but development.

We shall frequently use the term DU (digital universe). A hypothetical GOS (General operating system) manages its global operations.

We shall refer to the four Aristotelian causes: material, formal, efficient and final.

Matter will refer to the basic digital raster. Material objects in the common sense will be called physical.

The object of this work is to help today's human beings, beginning with the author himself, to feel more at ease in the present world and its trends. Or, to say more, to enjoy it more fully, lowering their inhibitions without, at the same time, lowering their vigilance about its negative sides and real threats.

For that, it tries to build a "conceptual frame, tending to unity" [Longo-Bailly] which could be compared to the cybernetics and “systemics” efforts in their time. A main common point is to go past the opposition between human and non-human, and to soothe fears that the relativisation of mankind is a threat to humanity. For that, it should be shown (but we can give only partial hints):

- that something better than the present mankind is not unthinkable, but is difficult to imagine and demands, at the same time:

. some deconstruction of our binary conception of mankind, without falling into any kind of radical pessimism

. some elements of construction of this "better world" which, paradoxically perhaps, include a stress on the radically non-perfect nature of this future; any "perfect system" is from the start inhuman and lethal; "idea kills life" (in the line of Freud through Denis Duclos, in [Vincent]); on this line, our view may be taken as “constructivist”, in so far as many of the terms and concepts are not directly “natural” but constructs, if not “theoretical fictions”. And all the more so since artificial digital beings take such a part in the story! We take here “constructivism” in a resolutely positive and... constructive way!

- that digital systems do not have the inhuman and lethal rigidity traditionally associated with "binary" systems. Here, the considerations about "digital relativity" are central. But so are our efforts to show that terms like "meaning", "sense", "emotion" and "art" apply to them; on this line, the role of art will be stressed as major; not to give the artists an exclusive role, but rather, in the line of [Michaux] "art in the gaseous state", to say that any activity has to become more and more artistic.

Bits everywhere

In the 21st century, to view the world as primarily digital is no longer revolutionary. We live, work and entertain ourselves most of the time with digital tools or toys. Interconnected through the Internet, digital devices take as central a place in our minds as Earth does for our bodies. Then we must make (and too bad if it is only one more, while waiting for the next to come) a Copernican revolution, placing in the centre the digital construct, in other words the set of patterns that the rise of machines offers to men today. It offers us a mirror, an antithesis, an extension, an ally, an accomplice for new steps.

The former views and philosophies of the world neglect or despise the technologies. They still set in the centre the human biped which emerged from the monkey to reach universal power on earth but also in atrocity. For all the philosophers, up to Husserl, Heidegger and their epigones, even Sloterdijk, humanity is central. Even if it is a superman, as for Nietzsche. Or a collectively divinized man, as the proletarian for Marxism.

Extending here the views of Hegel, we follow him along the long path of the growth of “Spirit”. But we reject his idea that History finishes with man enlightened by the Hegelian philosophy. We also push beyond existentialism, which explicitly puts man in the centre of the world, with his mere non-determination. For we think that machines also, at least since Von Neumann, can be undetermined.

The ideas that we propose here, aiming at a formalization, if not a computation, of all these concepts, cannot claim to solve all questions, nor to bring a totally encompassing framework.

One of those tenets, expressed in our book L'informatique libère l'humain [Berger 1999], is that the process is hopelessly endless. The digital dialectics has no stopping point. Anyway, it would be even more horrible if some wall were reached (in the Jean-Michel Truong manner). We can at least find some comfort in the digital relativity part of the reflection: the impossibility of a totally computable world is both despairing and reassuring against the kind of rational dictatorship that it could result in (a fear formulated for instance by Denis Duclos, in La technique et le façonnement du monde [Duclos]).

An important part of DU is its stable part, the bits stored for long-term use, a patrimony. But DU is not static. It may be thought of as a soup, like the primeval soup where life began, a magic cauldron with powerful bit currents, highly emissive poles as well as purely receptive poles, highly interactive sites... Globally, DU is expanding today. It is from there that we can look to the past, the origins, and the future.

We could entitle this work “The selfish bit”, as a generalization, or a deepening, of “The selfish gene” [Dawkins]. Let us note that a bit is altruistic by necessity: an isolated bit has no meaning.

Is the bit something subjective, an “a priori” scheme, along a Kantian view? Or something objective, really observable in concrete, even material objects? The answer is: both. In a DNA molecule or in a computer, the digits are as objective as any other part or feature of the object.

A world that stands for itself

Bits, numbers, texts and bitmaps are not primarily representations of something else, even if representations were primary in the building of the “digital” term and concept. The digital beings are the reality itself. They exist and develop for themselves, or for other digital beings. Some parts of them connect with "matter" or the "physical world", but that becomes just a secondary aspect, a historical constraint. The map is the territory, contradicting the classical motto of General Semantics, "The map is not the territory". Or at least, the digital is what matters first, even if it refers to, and somehow demands, a physical basis.

Then the traditional problems of truth, the debates between realists, idealists, nominalists or positivists are left behind. Though, of course, we shall have to consider them at some time, but without the restlessness of Cartesian doubt or the cynical indifference of scepticism. In DU, signifier and signified are all bits.

Our view is neither materialist, nor spiritualist. It tends to avoid any "dualism", though this question, even considered as lateral, cannot be avoided.

Digital beings are no longer mainly tools, even if computers emerged primarily as computing tools. There is no life without a digital code. And the DNA code cannot be taken as a tool for the rest of the being.

But digital beings cannot stand alone. They need some material, physical basis, some “incarnation”. Like the DNA of the nucleus within the rest of the cell. And we do not claim that DU “is” the real universe, but only that it is a pertinent and useful part of it, or a projection of it on some “epistemological plane”.

0.3. Epistemological stand : post-post modern

Since digits entered into existence well before men, and will perhaps survive mankind, our view can be taken as “non humanist”.

We won't start from man. Following part of the cybernetic and systemic view, we can say with [Bertalanffy] (1966 for the French edition): "It is an essential feature of science to de-anthropomorphize progressively, in other words to eliminate step by step all aspects due specifically to human experience".

But a digital view of the world is not incompatible with a human, even mystical, view, as shown by the great prologue of St John's Gospel: "In the beginning was the Word".

Here man is an aim, an ideal to build, or to go beyond. Not a datum, let alone a pre-existing nature that we should try to find and to reach. We do not have to "become what we are" (as Nietzsche said, after oriental philosophers). We are not, worse still, a lost pristine nature that we should restore by our own strength, or by the mysterious intervention of a Saviour, or by the heroic deeds of Frodo or some Jedi knight. We have to become what we will, and for that to design consciously what we want to become, even if that cannot be a nonsensical fantasy.

After such bold and immodest pretensions, let us show some basic limits of such a cosmology.

- Our model refers to a global DU, and to its operating laws and processes, which we shall call GOS (General operating system). Such a general entity bears contradictions, as does "the set of all sets".

- In the same vein, our cosmology aims at a most general law of the universe (at its core, the L maximizing law), while precisely proposing a "digital relativity" which excludes the possibility of such global views.

- Human consciousness, and related concepts like emotions, are at present far beyond what we describe. Then part of the work must be taken as a rather simplistic model, a metaphor more than a real explanation. Nor must we push too far the metaphor laid out in the preamble, and imagine DU as a conscious being pushing its interest. That may be useful, or motivating at times, like God for the pious, or Gaia for some ecologists.

Post-modernism could deter us from such ambitious views. But postmodernism also finds its limits, and the selfish claim for freedom may crash on a lot of “walls”, mainly perceptible today under ecological mottos. Somehow, then, our approach may be taken as post-post-modern.

In spite of these limits, we would not have undertaken such a writing without the conviction that it may help to better understand the world of today, to contribute positively to its development, and to enjoy it without guilt.

PART 1. BIT, THE DIGITAL ATOM

1. The bit, atomic but clever

1.1. Digital and binary

The definition, and crucial quality, of the digital universe, is that it is made of bits.

So, it can be seen as a set of binary values. The simplest view is an indefinite string of 0s and 1s along the numeric scale of the integers (N). Or a 2D or 3D raster bitmap image. Or, more formally, DU is {0,1}*, the infinite set of all finite strings of 0s and 1s, and still more exactly this set as connected to the real world, “incarnated”. In nature as in artefacts, digital beings never operate in total independence of matter. In the reproduction of living beings, the combination of genomes will give a new being only through an epigenetic process which is not totally digital, immaterial.
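As a small illustration of the notation {0,1}*, read here in its usual formal-language sense (all finite strings of 0s and 1s), the following sketch enumerates the strings up to a given length. The function names are hypothetical and chosen only for this example; the set itself is infinite, so only a finite prefix of it can ever be listed.

```python
from itertools import product


def binary_strings(max_len):
    """Yield every string over {0, 1} of length 0..max_len, shortest first."""
    for n in range(max_len + 1):
        # product("01", repeat=n) walks through all n-tuples of '0' and '1';
        # for n == 0 it yields one empty tuple, i.e. the empty string.
        for bits in product("01", repeat=n):
            yield "".join(bits)


def count_up_to(max_len):
    """Number of strings of length <= max_len: 2**0 + 2**1 + ... + 2**max_len."""
    return 2 ** (max_len + 1) - 1


print(list(binary_strings(2)))
print(count_up_to(2))
```

Each extra bit of length doubles the number of new strings, which is one way to see why the set grows without bound.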

Interesting structures operate at a higher level : molecules more than atoms, genes more than DNA pairs, words more than letters...

Note that "digital" is not a synonym of "numeric", as implied by the French translation (numérique). See the "representations" chapter.

Digital and binary are practically taken as synonyms.

A digit may be a number (from 0 to 9). It could also be taken as any “key” (which we press with a finger, digitus, in Latin). But, since Von Neumann, binary is recognized as optimal.

1.1. The bit, mathematical, logical...

The bit is solid, robust and perfectly atomic in the etymological sense of unbreakability. There is no thinner nor smaller logic element than the bit, no smaller information unit, and no smaller element in decision and action.

The bit is also polyvalent; one could say "plastic" or “pivotal”, for abstract mental constructions (logics) as well as for physical devices.

Is the bit an optimum from a logical standpoint?

- a ternary logic would, for some experts, be better, since the mathematical constant e is nearer to 3 than to 2

- in some electronic devices, or parts of devices, a "three-state logic", the third state being somehow neutral, is more efficient

- modal logics have attractive aspects that do not always reduce to binary (modal logics ... )

- a bit is not the least possible quantity of information; its full value is reached only when the two positions of the alternative are equally probable; if one of the positions is more probable than the other, the informational quantity decreases, and it vanishes when one position is certain.
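Two of the points above can be checked numerically. The sketch below is only an illustration under stated assumptions: it takes Shannon's binary entropy as the measure of the "informational quantity" of a two-way alternative, and the classical radix economy estimate b / ln(b) as the relative cost of writing numbers in base b, which is what makes e the theoretical optimum. The function names are hypothetical.

```python
import math


def binary_entropy(p):
    """Shannon information, in bits, of an alternative with probabilities p and 1 - p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be a probability between 0 and 1")
    if p in (0.0, 1.0):
        return 0.0  # a certain outcome carries no information
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def radix_cost(base):
    """Relative cost base / ln(base) of representing numbers in the given base."""
    return base / math.log(base)


for p in (0.5, 0.9, 1.0):
    print(p, binary_entropy(p))

for base in (2, 3, 4):
    print(base, radix_cost(base))
```

The entropy reaches one full bit only at p = 0.5, and the radix cost is lower for base 3 than for base 2, in line with the two remarks in the list.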

Some other considerations:

- is a bit a particle, and not an atom?

- a bit is a kind of balance, a dissipative structure (a priori in DU, and in full right in the physical universe)

- bits may be seen as electrons running through logical gates, which are stable structures of nuclei which determine the flows; hence, immediately, the irreversibility of logical functions

- a bit may be seen either as a “cut”, the transition between two spatial regions, or as a “pixel”, a small region

- a bit “exists” when it is located, either “materially” (a geographical position on Earth, a place on a chip) or “digitally” (a digital address in some address space); hence the fundamental assertion in DU: “there is that”

1.2. One bit, two bits...

A bit alone has neither meaning nor value by itself; it is not even perceptible. At the limit, a single bit does not even give the direction of transfer, which is implied only by the transmitting device.

Nevertheless, the mere existence of a bit “means” at least an elementary optimism. If there is a bit, that implies that somehow order is greater than disorder, structure more powerful than chaos. At least locally. From the most elementary level, we can then assume the presence of a system founding values, yields, operational capacity, and at least a difference as opposed to undifferentiation.

But that, by itself, places the bit inside a system which allows it to be appreciated.

A new bit is cut, a new being is born. At some moment, the causal vise loosens. A new complexity level is reached, with potential DR for indeterminacy and recursivity, letting a new autonomous being appear.

1.3. Discrete and continuous

Could this DU model build the continuous, and under which conditions?

In a large measure, discrete beings may be processed continuously. For instance, cookies carried on a conveyor belt during the baking phases. Reciprocally, continuous products may be "discretized" for processing (batch processing in the chemical industry or in information systems).

The term "transaction" says, generally speaking, that a batch of operations is applied to a batch of something, often by an exchange between two processors. This term is used mainly for money and information. Formal atomicity rules have been expressed for correct and fail-safe transaction processing (two-phase commit, ACID rules).
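To make the atomicity rule concrete, here is a minimal sketch using Python's standard sqlite3 module on an in-memory database. It illustrates only the "all or nothing" property of a single-database transaction, not a two-phase commit across several systems; the `accounts` table and the `transfer` function are hypothetical names introduced for this example.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst as one batch: either both updates apply or neither."""
    with conn:  # the connection commits on success and rolls back on any exception
        conn.execute(
            "UPDATE accounts SET balance = balance + ? WHERE name = ?", (amount, dst)
        )
        conn.execute(
            "UPDATE accounts SET balance = balance - ? WHERE name = ?", (amount, src)
        )
        (balance,) = conn.execute(
            "SELECT balance FROM accounts WHERE name = ?", (src,)
        ).fetchone()
        if balance < 0:
            # Raising here undoes the credit already applied to dst.
            raise ValueError("insufficient funds")


def balances(conn):
    """Return the current balances as a {name: balance} dictionary."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (name TEXT PRIMARY KEY, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("a", 100), ("b", 0)])
conn.commit()

transfer(conn, "a", "b", 30)
print(balances(conn))
```

A failed transfer leaves both balances exactly as they were, which is the discrete, indivisible character the term "transaction" points to.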

Continuous and discrete are never totally separated. "Every morphology is characterized by some qualitative discontinuities in the substrate" (Mathématiques de la morphogenèse [Thom]). In other words, the digital is always present, even in analogical systems (dissymmetry?).

“Inside” the DU model we can build the real line R, to find the continuous again. This construction cannot be done "directly", since it presupposes infinity somewhere. But there is a way through the formal: the infinite potentiality of a "meta" level with regard to the lower level. Projective spaces? Hence, one could end up with analogue computation, if there is still something to work out of it (not totally, see Bailly-Longo). The cut in the construction of the real line R is dual to the fundamental N cut. It is their combination which gives this impression of a "total" recuperation of the real.

Continuous beings, and matter itself, emerge only through the irreducibility of logic forms (square root). Matter (or animality) is what supports the keeping on, a bridge in between, while waiting for (let us hope) a more subtle formalism which re-establishes continuity, or even better logical coherence.

Physically, continuity is an illusion (quanta), or better a construct, no more "natural" than digits. Continuous functions are only approximations to express large numbers. Continuity is built by the brain, and is a sort of necessity for thinking as well as for acting.

A continuous function is continuous in respect of the being it describes, but nevertheless is discrete in its expression (text). It draws a bridge, symmetrically to physical matter, thought as an infinite base for bits and quanta.

1.4. Digital and analog/analogue

The distinction applies to representations (here analogue is more frequent) as well as to devices and specially electronic circuits (here analog is more frequent). At first sight, the distinction seems perfectly sharp and clear. In practice the border may be fuzzy.

The digital/analog and discrete/continuous oppositions are not orthogonal. Analog is nearer to continuous, but different. Digital, however, is necessarily discrete.

1.4.1. Representations

Thesis. An analog representation

- is continuous (if the represented entity is continuous) and

- is obtained from the original being by some kind of continuous function: optical transfer, electrical value transformation, etc.

A digital representation is built by three basic cuts:

- the representation is not the represented being: the map is not the territory

- the representation is cut into bits

- the representation structure is not (or at least is not necessarily) homothetic to the represented being (analogue/digital). This last cut is what makes the difference between analogue and digital (better words should be found).

Typically, the difference is illustrated on a car dashboard, with needles on dials (analogue) opposed to digits (here, numerical digits).

In most cases, the border is not so sharp:

- digital representations, at high resolution, appear as continuous and analog

- for ergonomic or aesthetic reasons, digital data are frequently presented in analogue form

- quanta theory

The difference between analog and digital representation lies in the mapping of the structures of beings into bits. Analogue mappings transfer, more or less, the spatial relations of the beings into the arithmetical succession of bits. Digital mappings use the arithmetical succession as a support for any kind of relation internal to the beings. That is the basic addressing system. A digital representation is always, more or less, coded. Even a 2D raster demands some definition of rows and columns.

Example: on a random sequence of 0s and 1s (not too compressed, let us say one among ten), computing the density around each point, over a more or less narrow window, yields a continuous function through interpolation or smoothing.
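The example can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the text: "one among ten" is read as "about one bit in ten is a 1", the density is a plain moving average, and the names `local_density` and `radius` are hypothetical.

```python
import random


def local_density(bits, radius):
    """Moving-average density of 1s around each position.

    The window is clipped at both ends of the sequence, so it always
    contains at least radius + 1 bits.
    """
    n = len(bits)
    densities = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = bits[lo:hi]
        densities.append(sum(window) / len(window))
    return densities


random.seed(0)  # fixed seed, only to make the sketch repeatable
# Assumption: a sparse random sequence, roughly one 1 in ten bits.
bits = [1 if random.random() < 0.1 else 0 for _ in range(200)]
density = local_density(bits, radius=20)
```

The wider the window, the smaller the possible jump between two neighbouring density values, so the curve looks smoother, while the underlying being stays strictly binary.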

Kinds of analogies

- myth

- anthropomorphism, and in robot design

- logical inference as analogue of a process

- iteration as an image of the wheel.

1.4.2. Devices

Devices may be mechanical or electronic.

Electronic circuits are assemblies of gates.

Analog circuits. See definitions and catalog of circuits on the web.

Circuit schemata: analog (left), digital (right)

An analog computer (that existed until the 70's) uses such functions.

Some sayings of the Futurists :

Analogy is nothing other than the immense love that ties together distant things, apparently different and hostile. Using wide analogies, this orchestral style, at the same time polychromic, polyphonic and polymorphic, is able to embrace the whole life of matter […]

The analogic style is then the absolute master of the whole of matter and its intense life... To wrap and hold the most fleeting and elusive aspects of matter, we have to build nets of images or analogies, and throw them into the mysterious sea of phenomena.

If we want to enter into communion with the divinity, running at high speed is indeed a prayer. Holiness of wheel and rails. Kneel on the rails and pray to the divine speed. The drunkenness of high speed in a car is a drunkenness in which we feel merged with the one and only divinity. Sportsmen are the first catechumens of this religion. Probable, soon unavoidable, destruction of homes and towns. Elsewhere, large meeting points for cars and airplanes will be built.

Mathematicians,

we call upon you to love the new geometries and the gravitational fields

created by masses moving at sidereal speeds.

Mathematicians, let us assert the divine essence of random and risk.

Let us apply probability computing to social life.

We shall build futuristic towns, designed with poetic geometry.

(Marinetti was aware of Riemann's work and of general relativity.)

On the last point, he does not seem more delirious than Simone Weil seeing in mathematics a proof of God's existence.

About the Chevalley/Zariski dialogue

- they start from language, then “I mean”

- it is not directly ontological

- what is interesting after that : what shall we do with that, what can we modify, play with…

- the geometer can move, deform (topology), immerse in a three-dimensional space

- the algebraist can write some form for f, any thinkable form. Let us note that, seemingly, Chevalley chooses a two-dimensional space.

Then, what will the computer scientist do for « I mean »? Bézier? Write some lines of algorithm?

And the neurologist ?

1.4.3. Life

Thesis : Life is partly analog, partly digital.

1.4.4. Any object is “hybrid”

Thesis. No being is totally digital, nor totally analog. There remains always some analogies in the coding, the code structure, etc. At least in the ordering of its bits, which are “analog” to its structure.

For example, a digital image is digital for the coding of the pixels, but the raster organization of the pixels is analog to the space of the representation.

1.5. Sampling and coding

Digitalization of representations has two complementary sides: sampling and coding. For pictures, they become pixelization and vectorization, both of which can be traced far back in art history. We could call the former “material digitalization” and the latter “formal digitalization”. This opposition is roughly parallel to the analog/digital opposition.

Digitalization of images 1. Pixelization. From left to right (extracts) : Byzantine mosaic in Ravenna (6th century), pointillist painting, “Le chahut”, by Seurat (1889-90), and “La fillette électronique”, by Albert Ducrocq with his machine Calliope (around 1950).

Sampling/pixelization: Byzantine mosaics deliberately use a sort of pixel, the tessera, to create a specific stylistic effect [1]. Impressionism, and even more neo-impressionist pointillism, have scientific roots. Computer bitmaps emerged in the 1970’s, with forerunners such as Albert Ducrocq [2], with his handmade paintings generated by the machine Calliope (a random-generation electronic device using algorithms for binary translation to text or image).

This form of digitalization stays more “analog” than the vectorial one. The “cutting” operates at the level of the representation itself. The conversion requires comparatively few conventions, except for the raster size and the color model. Within precision limits, any image can be pixelized, and any sound can be sampled.

Digitalization of images. 2. Vectorization. From left to right: Guitar player, by Picasso, 1910; Klee tutorial, 1921; Schillinger graphomaton, around 1935.

Coding/Vectorization is a more indirect digitalization, since it creates images from elementary forms, as well as from texts, grammars, and the particular kind of text that is an algorithm. Here also, the roots go deep into art history. An important impetus was given by cubism. The Bauhaus worked hard along this path, see for instance Itten [3] for color and Klee [4] for patterns. A first explicit vision of automatic vector image generation was given by Schillinger [Schillinger]. Incidentally, Schillinger was mainly a music composer and composition teacher, and of course, digitization and music went along similar ways.

In music, the main coding system today is MIDI.

The cutting operates not directly on the representation, but on language objects. The conversion requires more or less detailed conventions. It may be hierarchized and leads finally to language. Any analysis (and symmetrically, any synthesis) may be considered as a coding (and possibly decoding).

Not every representation (picture or music) may be appropriately coded, or coding may imply considerable loss, especially if the code is used to generate a “copy” of the original.

The two ways of evolution have merged in binarisation in the 1950-60’s, as stated in the seminal paper of Von Neumann et al. [6], “We feel strongly in favor of the binary system”, for three reasons :

- hardware implementation (accuracy, costs),

- “the greater simplicity and speed with which the elementary operations can be performed” (arithmetic part),

- “logic, being a yes-no system, is fundamentally binary, therefore a binary arrangement… contributes very significantly towards producing a more homogeneous machine, which can be better integrated and is more efficient”.

What about "analysability" or "divisibility"?

- in DU, any being is fully divisible into bits (and more often than not, for practical purposes, into bytes)

- sometimes a being may be fully divided by another being, and then considered as their "product". Examples:

. a text (in a normal language, natural or not) is the product of the dictionary times a text scheme

. a written text is the product of a text scheme, a language, and graphic features: font etc.

. a bitmap with its layers (R, G, B, matte...).

1.6. Concrete/abstract

The concrete/abstract distinction stems from the basic assertion scheme: there is that.

The concrete object is: what is there.

The abstract object is: what is that.

“That” is a term, or a concept. It has (somewhere in DU) a definition or a constructor.

The extension of “that” is the set of concrete objects corresponding to that definition (or so built). Or possibly the cardinal of this set. The comprehension of “that” is the definition. It is also a DU object, and so may be seen as a string of a definite number of bits.

Thesis : the definition or constructor does not, in general, describe or set the totality of the object, every one of its bits. The definition may be just a general type, of which a lot of bits will be defined by other factors. An object may be recognized as pertaining to a type because it has the corresponding feature. Among other consequences, that leaves room for actions on this object by other actors or by itself.

If a concrete object strictly conforms to its construction, it may be said to be “abstract”, or “typical”, somehow, or “reduced”. “Reductionism” is the thesis that the whole of DU may be obtained from its definitions. The ratio of “type defined” bits over the total number of bits of the object could be taken as a measurement of its “concreteness”.

Quotations : An abstract system is made of concepts, totally defined by hypotheses and axioms of its creators (Le Moigne 1974, after Ackoff). Abstract beings have a limited and definite number of psychemes. (Lussato, unpublished, 1972)

Thesis : DU itself may be defined, but of course very partially. That may be controversial. The address of DU is somehow the “zero” address.

Thesis: When comprehension grows, extension decreases, since fewer and fewer objects correspond to the definition. There comes a moment when only one object corresponds to it. For instance, “world chess champion” has only one object at a given time. Or there may exist no object answering the definition, even if it contains no contradiction.
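A small sketch may make this thesis concrete. It assumes, for illustration only, that a comprehension is a list of predicates and that the extension is the set of objects satisfying all of them; the four sample objects and every name here (`players`, `extension`, and so on) are hypothetical.

```python
# Hypothetical concrete objects, each a small record of features.
players = [
    {"name": "A", "plays_chess": True, "grandmaster": True, "champion": True},
    {"name": "B", "plays_chess": True, "grandmaster": True, "champion": False},
    {"name": "C", "plays_chess": True, "grandmaster": False, "champion": False},
    {"name": "D", "plays_chess": False, "grandmaster": False, "champion": False},
]

# A comprehension, modelled as a list of predicates that can be lengthened.
comprehension = [
    lambda p: p["plays_chess"],
    lambda p: p["grandmaster"],
    lambda p: p["champion"],
]


def extension(objects, predicates):
    """Objects that satisfy every predicate of the (partial) definition."""
    return [o for o in objects if all(pred(o) for pred in predicates)]


# Extension size as the definition grows from 0 to 3 predicates.
sizes = [len(extension(players, comprehension[:k])) for k in range(4)]
```

Here `sizes` is `[4, 3, 2, 1]`: each added predicate can only keep or shrink the extension, down to a single object for the most specific definition.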

Thesis : If there is only one object, if the extension is 1, then one can say that the abstract and the concrete object are the same. (to be checked).

There is a kind of symmetry:

- when abstract grows, concrete emerges out of it

- when concrete knowledge (measure) grows, abstract must become more finely cut, then more massive.

An example of concrete beings emerging from abstract descriptions: segment one (from the "one to one" marketing language), "the one who...". Evolution of the information quantity about a consumer: at the start, the customer does not even exist in the information system, which records only anonymous operations (or operations difficult to group, as with insurance contracts). Then the information grows to cover not only the individual customer but also his environment.

See identity : what makes an object unique.

Absolute concrete may be taken as a sort of limit, of what is accessible by all beings in DU (and possibly without conventions ? )

Thesis : Codes without redundancy entail hierarchy loss. And a wrong bit spoils the whole system, if there are no longer stronger and weaker beings.

Note : a lot of developments here can be found in the literature about Object Programming. See in particular [Meyer].

1.7. Natural and artificial

These concepts are controversial. We take here a stand adapted to our DU presentation.

Thesis : digital is not a property specific to man-made objects. A bit is natural or not. The opposition natural/artificial is orthogonal to the opposition digital/analog.

Artificial beings:

- are synthesized by living entities (though generally not with a clear anticipating view) or by other automata?

- can mimic natural beings, but lack some or other aspects of the real natural being;

- may be described in terms of functions, aims, adaptation;

- are often considered, in particular at design time, in imperative terms more than descriptive ones [Simon, 1969].

The natural beings are the non-artificial ones. A being may be said to be natural if one does not know any DU being that has made it "consciously". That is generally easy to say of non-living beings found in "nature", such as stones. Living beings can be considered natural since the origin of life is unknown, and insofar as biological reproduction is compulsive and not really controlled. On the other hand, we shall call "artificial" the beings that result from a "conscious" activity, such as bird nests or beaver dams, and of course the products of human activity. This distinction is problematic, as is the word "consciousness" itself, which is generally admitted to be a mainly human feature, somehow shared with animals, but not at all with machines. Does technological progress lead us to conscious machines and to a merging of the natural and artificial worlds (or, as may also be said, to a complete "artificialization" of the world)? That is an old issue, which emerged mainly in modern times, but has very ancient historical roots.

As long as life is so different from non-living existence, we may generalize "artificial" to all artefacts due to living beings, at least if they are external to their bodies and relate to a sort of "conscious" activity.

So, we call natural all beings not explicitly made by living entities, including these entities themselves, as long as they cannot reproduce themselves by explicit processes. However, artificial entities can reproduce themselves explicitly, at present only in the elementary form of viruses. Viruses and their productions may then be considered artificial. If some day, and that day will perhaps come soon (or perhaps never), mankind succeeds in creating life from non-living beings, the border will lose its present radically impassable nature. In the long term, men would be able to make living beings, and robots would be able to make human beings...

Thesis. DU globally taken is natural.

Quotation : The artificial world defines itself precisely at this interface between internal and external environments [Simon, 1969].

1.8. DU and its parts

About addresses

Ideally, with DU taken as having only one dimension, the address could consist only of the number of the first bit (possibly of the first and last bits). That would be absolute addressing.

In practice, DU is divided into various subspaces and we use “relative” addresses, with a hierarchy of addresses inside, for instance the directories and subdirectories of a disk or a web site.

Thesis : The smaller the subspace, the shorter the address can be.

We have had an example with phone numbers. At the beginning of the XXth century, a company could just mention “telephone” on its letterhead: human operators could find it directly from its name. Then, for convenience as well as for automation needs, numbers were used. For instance, in a small town: 67 (if you called from another town, you would add “69 at Lasseube (France)”). Then the number was progressively lengthened to

00 335 47 32 18 67

Thesis : Within the same (sub)space, relative addresses are longer than absolute ones. There is a sort of gap (here, an "excess" of code) between what is needed to access an individual and what is used to describe it. For a population, the minimum address length is the logarithm of the population. For the French NIR (social security number), 1 38 08 75 115 323, that is 10^13 possible codes for 6 × 10^7 inhabitants.
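The arithmetic behind this "excess" can be checked with a short sketch. It uses only the figures quoted above (13 digits, about 6 × 10^7 inhabitants); the function name `min_address_length` is hypothetical, and integer arithmetic is used instead of a floating-point logarithm to avoid rounding surprises.

```python
def min_address_length(population, base=2):
    """Smallest number of base-`base` symbols able to tell `population` individuals apart.

    Equivalent to the ceiling of log_base(population), computed with integers.
    """
    length = 0
    capacity = 1
    while capacity < population:
        capacity *= base
        length += 1
    return length


population = 6 * 10**7   # order of magnitude quoted in the text
nir_digits = 13          # digits in the sample number 1 38 08 75 115 323

min_digits = min_address_length(population, base=10)
min_bits = min_address_length(population, base=2)
excess_digits = nir_digits - min_digits
```

With these figures, 8 decimal digits (or 26 bits) would suffice as a bare address, so the 13-digit code carries 5 digits of "excess", which is where the descriptive content (sex, year and month of birth, place) can live.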

At the other extreme from the bits, we can consider a global digital universe, DU taken globally. That is perhaps unrealistic, and it is more sensible to consider that there is a plurality of digital worlds, separated/connected by analog/physical regions...

It could be useful to distinguish in it:

- a general memory, or general bit raster (DU itself)

- action and management rules and operators (Global operating system, GOS)

- ways of ensuring communication between beings...

For life, there is no such raster, and it uses the laws of matter to effect these functions. See architectures.

More generally, see structures in chapter 7

Globally, DU is necessarily auto referent. Parts of DU refer to other parts. And some parts refer to themselves. (a map of addresses contains necessarily the address of the map ?). In a first analysis, probably, auto referent parts should be put aside.

GOS functions are, or may be:

- general clocking; that is not necessary; processes could run asynchronously, or synchronize without any global intervention (communication protocols)

- law operations (and "enforcement"), security; that could be mainly local; at the global level, ownership of territory is ensured by the underlying matter; the more digital the world, the "softer" the assignment of locations inside DU as well as in the underlying matter;

- noise generation; normally, it will not be properly assigned to a DU processor; but why not;

- communication between processors; here also, roles may be shared between matter and more or less general processors

- controlling the operations of non-autonomous beings; that, de facto, is more the task of local beings;

- ensuring the reproduction of DU upon itself at each global cycle, if we retain this kind of model; this point is close to metaphysical.

GOS may be seen as DU's God. In this case, it must face the paradoxes of the creator, of the rational God of natural theology, and of every self-referent being.

We shall now frequently use the term "material" to mean the location and disposition of a being in DU, as opposed to its "formal" features. When referring to the material universe of common sense, we shall call it "physical".

Dimensions

The number of dimensions (mathematically speaking) does not have here the radical implications that mark the physical universe. DU is at first sight rather indifferent to dimensions: one dimension in all would do as well as one dimension per bit. The genome is one-dimensional in its basic structures. 2D is frequently used for chip design and for representations, because our vision is so structured. More exactly, it is frequently 2+, or 2½, with the use of layers (chips, raster images) or of compacted perspective, for maps for instance.

Dimensions become important when beings have relations; they must then be defined:

- independence of subparts

- difference in metrics.

Unless otherwise stated, DU may be thought of as a one dimension bit string.

The space is indefinite, potentially infinite. Infinite may be called for by a loop without stop condition, but of course we never get there by a finite number of cycles.

There is no proper zero, anyway.

Addresses

1.9. Varia

Clever and selfish ... These words are anthropomorphic. But, as with Dawkins it may be an efficient scheme, a useful “theoretical fiction”.

Possibly a double way of bits into matter :

- a small surface into a raster (e.g. pixels, voxels)

- a partition of a space into two parts.

Bits and infinity : through recursion.

3. Beings

To get an idea of the global structure of DU, we cannot avoid more or less metaphoric reference to the world of today, with its two families of digital beings: living beings and digital machines. Our eyes show them as a population of entities, materially grouped in bodies and machines, partly connected by wires. What we do not see is the net of "light" connexions:

- by light and sound, which have long been the major communication media of living beings,

- by electromagnetic waves, which began to operate with the wireless communication systems at the end of the XIXth century of our era,

- chemical, by odours or enzymes,

- by social relations between living beings.

If we zoom into these beings and communications, we shall find smaller elements: computer files and cellular nuclei, records, texts and genes... and, on our way down, just above the final "atomic" bit level, a general use of standard minimal formats:

- "bytes", i.e. sets of 8 bits (or multiples). That makes it easy to point to series of useful beings, such as numbers, alphabetical characters or pixels.

- "pairs" of two bits in the genes.

All these beings and their communications are in constant motion. Their distribution changes and their communication rates increase.

The basic grid. When DU is large, the grid is "deep under", but must never be forgotten (see Bailly-Longo)

3.1. First notes

This part could be taken equally as a kind of metaphysical ontology, as a semantic study of ontologies (in the computing sense), or as a development on object orientation. The terms are “constructs” more than observations.

A being is basically known by other beings who have its address. This one may be soft. But we must never forget that the being, to be real, must be instantiated somewhere materially.

A being may have an internal structure, but if the being does not include a processor, then this structure is meaningful only for other beings of sufficiently high level to “understand” it.

A being may have internal addresses.

Seen from outside, a being is a product of an address and a type, or class (that implies libraries of types or classes).

The address may be managed indirectly by GOS or an appropriate subsystem.

The type could be just "a bit string".

A being realizes a sort of coherence between the four causes. S has the necessary matter, the proper form to realize its finality, and proper input (efficient cause).

Then the yield will be sufficient, and the being may live long, because this conformity of finalities, coming frequently from the environment, gives hope of receiving the means of subsistence.

The existence of a given being is defined from an external system. But we shall see that things become more complex with large beings, which take charge by themselves of (at least some aspects of) their existence and the preservation of their borders, without removing the need for a final reference to GCom.

In some measure, the internal structures of a being are controlled by the being itself. A self referent being exerts a pressure upon itself, keeps itself together.

3.2. Basic binary beings

Subject to later rules, beings are either distinct, or one is contained in the other. There are no "transborder" beings, nor "free" bits in a being (this point could be discussed: we suppose that all the bits between the addresses belong to that being; this must be adapted when there is more than one dimension).

Internal addresses may be masked to external user beings. Like in OO programming.

The limits of a being may be defined:

- by the limits of other beings,

- implicitly (?), materially, by the characteristics of the supporting device, for instance the organization in cylinders and sectors of a hard disk (or more soft? including the word length),

- explicitly, by a parameter contained in the being (a header giving its length); that supposes that the user of the object knows how to interpret this header; if the being contains a processor, the latter may define the length.

How the limits of a being are defined:

- extension (interval in D1)

- formal formula (more difficult if there are many dimensions)

Anyway, the limits of the beings must be known by GOS and the other beings in order to protect it against destruction by overwriting.
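The "explicit" case, where a being carries its own length in a header, can be sketched as follows. The format is purely an assumption made for illustration (a 4-byte big-endian length prefix, counted in bytes rather than bits); the names `write_being` and `read_beings` are hypothetical.

```python
import struct

# Assumed header format: one unsigned 32-bit big-endian integer giving
# the payload length in bytes. Any reader must know this convention.
HEADER = struct.Struct(">I")


def write_being(payload: bytes) -> bytes:
    """Prefix a payload with a header stating its length."""
    return HEADER.pack(len(payload)) + payload


def read_beings(space: bytes):
    """Split a one-dimensional space into beings, using only their headers."""
    beings = []
    offset = 0
    while offset < len(space):
        (length,) = HEADER.unpack_from(space, offset)
        start = offset + HEADER.size
        beings.append(space[start:start + length])
        offset = start + length  # next being begins right after this one
    return beings


space = write_being(b"abc") + write_being(b"") + write_being(b"hello")
recovered = read_beings(space)
```

The reader finds the borders without any external table of addresses, but only because it shares the header convention with the writer, which is exactly the condition stated in the list above.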

An important point is that the metric qualities of a being, in particular its mass, change radically its features.

In particular :

- a rather large being (some million bits) generally contains elements describing itself (a header, at least)

- anyway, if the being is large, its type may generally be recognized from the content itself.

Thesis : the larger the being, the more it is self-referent, if only for reasons of efficiency. Typical examples: the header of a file, the descriptive parts of a computer motherboard and operating system.

Question : is there a minimal (digital) size of an object for even a minimal kind of self-reference ?

Let us now try to make a classification of beings, mainly in order to set a basis for a metrics.

Data, memory, representation, motor (clock is the only real motor).

3.2.0. Attraction

What holds the bits together? Here the four Aristotelian causes work rather well. But evidently for DU itself globally.

Materially. The general robustness of DU, plus the material connection of the bits, to begin with as materially connected regions. The hardware hierarchy: gates, chips, cards, frames, farms, networks, the Net.

Formally. That is mainly given by the formal definition of a being, its type and description, which is external to the being for the small ones, more or less internal (header) for larger ones. Form may include quantitative relations (format, possibly "canon").

Function, or finality, gives hope of survival if somebody finds the being useful. We shall talk later of "pressure", or ranking, which are in a way measurements of the functionality or utility of the being.

Efficient cause concerns the changes of the being more than its existence, except for the initial move: the creation of the being.

3.2.1. Organ, function

These concepts apply to a being seen from outside. This point matters in particular for expressing Moore's law, and more generally yields.

A being may be analyzed top down, functionally as well as organically; sometimes the two analyses coincide, and a function corresponds to an organ, and reciprocally. But sometimes not.

The organ/function distinction comes from observation of living beings (anatomy/physiology). It has been applied to information systems design (functional then organic analysis).

3.2.2. Material features

Seen from outside, or from GOS, seen as an "organ", a being is mainly defined by the space it occupies. Sometimes an address may be sufficient, but in other cases a more elaborated spatial description may be necessary.

In the physical, biological world, an organ is first identified by some homogeneity of colour and consistency, which will be confirmed by a deeper analysis showing the similar character of its cells. It is frequently surrounded by a membrane.

We frequently read that an organ is built bottom up. But that is probably inexact, or at least imprecise. Dissecting a body or dismantling a machine will show organs top down!

The relations with the rest of the system are primarily material: spatial vicinity. But there are also various "channels", be they neural or fluid conduits.

3.2.3. Functional features

A being is also defined by its function, a concept close to "finality". In programming, as well as in cellular tissues, functions frequently refer to a type (epithelial cell, integer variable). The bit is the most basic type, with no specific function, unless explicitly specified (Boolean variable). See OO programming. But the type may be very general: bit, etc. A function is generally defined top down. It may be defined bottom up by generalization.

In abstract systems, it is tempting to think that descriptions can be purely functional, whereas in concrete ones there is never a perfect correspondence between organs and functions. An organ may have several functions, and a lot of functions are distributed among several organs, if not over the whole system.

The "Overblack" concept

As a form of exploration, we could try to specify a machine which would maximize the non-isomorphism between organic and functional structures:

- each function diluted in a maximum number of organs, if not all

- each organ taking part in a maximum of functions (cables and pipes included?)

Orthogonality ?

3.3. Passive beings

At first look easy and intuitive, this concept is not so easy to define formally. As soon as we have O depending on I, and differing from it, S does a "processing". There are limit (or trivial) cases of passive beings/processors:

- the pit: it has I but no O (or possibly O not related to I)

- the null processor: O = I

- the quasi-null processor: O is nearly identical to I.

A being is said passive if it does not change otherwise than by external actions (I) (?)

Symmetrically, some cases of active beings:

- pure source: no I, but O

- O radically different from I, with a real processing depth

- a large time liberty of O emission with respect to I.

We can go farther, structurally. At some times, S may decide to be passive, while remaining largely master of itself. A passive being has no evolution between cycles. It is a pure address where other beings may write and read. There is no protection but the protection afforded by GOS and other interested beings.

Nota: A message is considered as active if the host lends it the handle (that has no being for a two bit processor). The active side may be determined by the physical character of the host (said passive against soft...)

Kinds of "living"

- being-processor-automaton. IGO Input, genetics, output

- representations processing (assimilation, expression, dictionary, language)

The clock is an important sign of activity. But a being could be active using the clock of another one, or the GOS cycle...

How can a clock be recognized from outside?

If S' has a much shorter cycle, and observes long enough, it can model the values of the clock bit, and assess its frequency and regularity. Of course, this clock could be controlled by another S.

If the cycle of S' is longer, it could perhaps detect and assess the clock over a long observation time.
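The first case (an observer with a much shorter cycle) can be sketched as follows. The model is an assumption made for illustration: the clock is a single bit that flips every `half_period` observer cycles, and the observer S' simply records one sample per cycle; the names `clock_bit` and `estimate_half_period` are hypothetical.

```python
def clock_bit(t, half_period):
    """Value at observer tick t of a clock bit that flips every `half_period` ticks."""
    return (t // half_period) % 2


def estimate_half_period(samples):
    """Average distance between successive flips seen in the samples.

    Returns None when fewer than two flips were observed, i.e. when the
    observation is too short (or the bit is not a clock at all).
    """
    flips = [i for i in range(1, len(samples)) if samples[i] != samples[i - 1]]
    if len(flips) < 2:
        return None
    return (flips[-1] - flips[0]) / (len(flips) - 1)


# S' samples the clock bit once per cycle, for 100 of its own cycles.
samples = [clock_bit(t, half_period=5) for t in range(100)]
estimate = estimate_half_period(samples)
```

Regularity could be assessed in the same spirit, by looking at the spread of the individual flip-to-flip distances rather than only at their average.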

3.4. Operator/operand/operation

In physical machines, the distinction between operators and operands is not in question. But, in the digital world, "operate a representation" and "represent an operation" could be strictly synonymous. Then there remains no difference between operators and operands, programs and data, etc. Not only is the informational machine reversible (like the perfect thermal machine), but there is reversibility between machines and what they machine!

Nevertheless, in the general case, we can note:

- difference in repetition; the operator does not change at each cycle of operation; in DU, normally, there is no wear. In some way, an operator always "massifies" the being it processes.

- an operator is supposed to be heavier.

Note. The fusion between operators and operands demands that a limit has been reached where it becomes possible. Beings must be light enough, de-materialized, to follow the move without delay or deformation; and operations, through sensors and actuators, must be reduced to operations on digital beings... But, moreover, general digitization was a basic requirement.

When you enter a lift and push a button, your gesture may be considered either as an operation on a representation (the storeys, from low to high) or as a representation of your intention: the storey where you want to go.

One could say that a basic dissymmetry remains : the initiative comes from me. But it uses the initiative of the lift builder...

3.6. The basic "agent"

We give particular importance to a basic "agent", inspired by the finite automaton, which has one good side: it is widely studied, not only by automation specialists but also by logicians and, at large, by digital systems specialists. See the book of Sakarovitch.

3.6.1. General principle

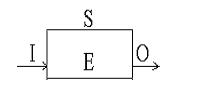

The description that we give here calls for a deeper one, in order to link it to the general theory of finite automata, with its inputs, outputs, internal states and operating cycle. We shall frequently abbreviate the agent as S.

(That scheme could be adapted to the four causes scheme, with the efficient cause as I (which leaves to E and its function a kind of auto-mobility), the formal cause as type, the material cause as implementation (in the DU raster) and finality as output (that is only partial).)

Materially, an automaton is a binary being, with its beginning and end addresses. Moreover (applying the finite automaton structure), we may partition it into three regions: I, E, O. The O region groups the bits to be emitted and the addresses. In the computations, we will assume that the I bits are at the beginning of the being and the O bits at the end.

All beings internal to a processor communicate with the external world only through the I and O zones (or ports) of the containing processor. Then a processor defines firmly an internal zone and an external region. And the more a processor is developed, the more it controls its ports. More firmly, but also more intelligently (open systems).

A being is seen from outside (image) as a part of DU, with a succession of I (non-state region), E (enclosed region, not accessible from outside) and a region O with its states accessible from outside, either at any moment or at intercycle moments (synchronous model).

The agent operates by cycles. Within each cycle, each processor operates according to its internal laws. Then, transmissions occur between beings. In that elementary model, we consider that :

- the cycle duration is sufficient to let all internal operations take place,

- there are no communication limits between beings, except for distance limits (see below).
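The two-phase cycle described above (internal operation, then transmission) can be sketched as follows. This is only an illustration under stated assumptions: the update forms E' = f(E, I) and O = g(E, I), the use of plain integers for the I, E and O regions, and the names `Agent`, `run_cycle` and `links` are all hypothetical and not part of the original model.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Agent:
    """One S: an enclosed state E, an input port I and an output port O."""
    f: Callable[[int, int], int]  # assumed form of the state law: E' = f(E, I)
    g: Callable[[int, int], int]  # assumed form of the output law: O = g(E, I)
    E: int = 0
    I: int = 0
    O: int = 0

    def operate(self) -> None:
        """Phase 1 of a cycle: purely internal operation."""
        self.O = self.g(self.E, self.I)  # output computed from the current state
        self.E = self.f(self.E, self.I)  # then the state is updated


def run_cycle(agents: Dict[str, Agent], links: List[Tuple[str, str]]) -> None:
    """One synchronous cycle for a set of agents."""
    # Phase 1: every agent operates according to its own internal laws.
    for agent in agents.values():
        agent.operate()
    # Phase 2: transmissions; each link copies one agent's O into another's I.
    for src, dst in links:
        agents[dst].I = agents[src].O


# Hypothetical example: a modulo-4 counter feeding an accumulator.
agents = {
    "counter": Agent(f=lambda E, I: (E + 1) % 4, g=lambda E, I: E),
    "acc": Agent(f=lambda E, I: E + I, g=lambda E, I: E),
}
links = [("counter", "acc")]
for _ in range(4):
    run_cycle(agents, links)
```

Because transmission only happens between cycles, the accumulator always works on the counter's output from the previous cycle, which is the synchronous reading of the model.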

3.6.2. A minimal model

Let us try to elaborate a minimal model of a "core" for an automaton, with B = the clock plus the constant part, unchanged, or possibly copied from cycle to cycle. B may be considered as the program running the automaton, or the parameters of its class. B includes the e and f functions, or pointers to functions that can be external. Somehow, it is the operating system.

By construction, as long as this automaton remains itself, B is constant, but E changes according to the inputs I.

We say nothing of O, which does not matter at this stage (as long as there is no remote random looping on I). B could be "subcontracted".

(this scheme should be taken also in ratios, with typical cases).

If S is really finite, there is nothing else. In numbers of bits:

Te = b + I + E (possibly + margin)

The loss bears not only on S (operating bugs) but also on B. Then, after a while, the system no longer knows how to reproduce itself correctly, and dies.

We can add some redundancy and auto-correcting codes to B and E, but that increases its length, hence its relativistic frailty. All the more so since we must also add to B in order to integrate the coding/decoding algorithms.

Then everything depends on the relation between the two growths. The loss probability is a function of the length Te, and this length itself depends on the auto-correction rate.

The reasonable hypothesis is that auto-correction is profitable up to a given level, which we shall call optimal. Hence, we shall consider that the relativistic loss rate is the net rate.

Once that rate is known, we can deduce from it the probable life expectancy of the system (excluding the other possible destruction factors, such as external aggressions).
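As a rough illustration of how a life expectancy follows from a net loss rate, here is a minimal sketch. It rests on assumptions that the text does not state: each of the Te bits is lost independently with the same net probability per cycle, and the loss of any single bit is fatal. The function names and parameters are hypothetical.

```python
def survival_per_cycle(p_bit: float, te_bits: int) -> float:
    """Probability that none of the te_bits is lost during one cycle.

    Assumption: independent losses with the same net probability p_bit.
    """
    return (1.0 - p_bit) ** te_bits


def life_expectancy(p_bit: float, te_bits: int) -> float:
    """Expected number of cycles up to and including the first fatal loss.

    Under the assumptions above this is the mean of a geometric
    distribution, i.e. 1 / (probability of a loss in a given cycle).
    """
    loss = 1.0 - survival_per_cycle(p_bit, te_bits)
    if loss == 0.0:
        return float("inf")  # no loss at all: the system never dies this way
    return 1.0 / loss


# With a net loss rate of one in a million per bit and per cycle,
# doubling the length roughly halves the expected life.
short_life = life_expectancy(1e-6, 2000)
long_life = life_expectancy(1e-6, 1000)
```

Under these assumptions, any redundancy that lengthens Te only pays off if it lowers the net per-bit rate by more than it adds in length, which is one way to read the "optimal level" of auto-correction.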

We may also make hypotheses on I, considered as meaningful for L.

Favourable case : I brings correcting elements to E (and also to B ?)

Note about B : the limit to its reduction is reliability.

3.6.4. Automaton cycle.

On every cycle (the cycle concept, with its radical cut inside time, is fundamental in the digital concept), each processor changes according to its internal laws. Then transmissions occur between automata. In this elementary model, we consider that:

- the cycle time is sufficient for all internal operations,

- there are no bandwidth limits between automata, but some distance minima (see below).

Possibly : a being may have its own clock, in order to sequence the internal operations during the cycle phase.

Then we can consider that E contains a part of B which does not change from an instant to the next one, and which supports the invariants of S, including the auto-recopying functions from an instant to the next one.

If we have A = B + E, we assume that GCo recopies B and the whole automaton according to elementary technologies. Then, we need automated addressing, with addresses shorter than log (B + E), i.e. log A.

If A is small, then the address is large in proportion, e.g. 2 bits address, 2 bit, A 21K, 10 bits address.

We see that framing/rastering is profitable, if only for this reason.
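The arithmetic behind this remark can be checked with a short sketch. A 2-bit address distinguishes 4 positions and a 10-bit address distinguishes 1024; addressing frames instead of individual bits shortens the address further. The function names and the 8-bit frame size are illustrative assumptions, not part of the original text.

```python
def address_bits(n_positions: int) -> int:
    """Smallest number of bits able to distinguish n_positions addresses."""
    if n_positions < 1:
        raise ValueError("n_positions must be at least 1")
    # (n - 1).bit_length() equals ceil(log2(n)) for every n >= 1.
    return (n_positions - 1).bit_length()


def framed_address_bits(n_bits: int, frame_bits: int) -> int:
    """Address length when a being of n_bits is addressed frame by frame."""
    n_frames = -(-n_bits // frame_bits)  # ceiling division
    return address_bits(n_frames)


# Relative weight of the address for a tiny and for a larger being.
tiny_ratio = address_bits(4) / 4          # 2 address bits for 4 bits of content
larger_ratio = address_bits(1024) / 1024  # 10 address bits for 1024 bits
framed = framed_address_bits(1024, 8)     # addressing 8-bit frames instead of bits
```

The ratio of address length to content length falls quickly as the being grows, and falls again when the unit of addressing is a frame rather than a bit.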

In a comparable way, we shall have op codes pointing to op codes.

GCom must ensure the transitics, i.e. transport inside A as well as between successive instants, then the rabattement.

Relation B/E (neglects the relation to I/O):

We reach here hierarchy, the nesting of sub-automata. As soon as there is a processor in A, their relations are defined by IEO(A). It is mandatory to give it:

- organically, an existence region in the string, at a moment in the processing (rabattement); if DF, delay/reliability parameters,

- functionally, an external description (type, IO addresses).

TE is the "rabattement" on one dimension of all zones of an S (if infinite ?). TGG is the rabattement on one dimension of the whole of MG.

The conditions of this rabattement may be discussed.

3.6.5. Being identification

We have a sequence of outputs O. We try to describe them, i.e. we design an automaton which generates these O.

This operation will generally be stochastic, heuristic, with an error risk.
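One very simple form of such an identification, given here only as a sketch, is to look for the shortest purely cyclic generator consistent with the outputs observed so far. This is an assumption made for illustration (the text does not restrict the identified automaton to cyclic ones), and the function names are hypothetical. The error risk shows up directly: the next observation may contradict the model.

```python
from typing import List, Sequence


def shortest_cyclic_model(outputs: Sequence[int]) -> List[int]:
    """Return the shortest pattern whose endless repetition reproduces outputs.

    The pattern plays the role of a hypothetical input-free automaton
    that cycles through its states and emits one symbol per cycle.
    """
    n = len(outputs)
    for period in range(1, n + 1):
        # The model fits if every observation repeats the one `period` earlier.
        if all(outputs[i] == outputs[i - period] for i in range(period, n)):
            return list(outputs[:period])
    return []  # only reached for an empty observation sequence


def predict(pattern: Sequence[int], t: int) -> int:
    """Output that the cyclic model expects at instant t (starting at 0)."""
    return pattern[t % len(pattern)]


observed = [0, 1, 1, 0, 1, 1, 0]
model = shortest_cyclic_model(observed)
next_guess = predict(model, len(observed))  # a guess, which may prove wrong
```

A longer observation can only keep the model or force a longer one, so the identified automaton is always provisional.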

Symmetrically, rational identification of the World by the automaton.

We can distinguish bodies with addresses. We assume that all the S have the totality of DU for I.

Find E and the f and g functions through I and O.

A very important stage is when S is able to know which O has an impact on its I, and possibly to experiment systematically, generating different outputs to see what it gets. At some level come the mirror test and the emergence of the I : emergence is the moment when the I is distinguished from D (what is perceived of D) (???? what is D?). To identify O, S needs a mirror. We shall have the global O, the image of the self for the external world. Inside this global O we will have the explicit O (gesture, speech, attitudes).

Automata functions are not reversible, in general. Probably it would demand a very particular automaton to obtain reciprocal (or symmetrical) functions giving I = f'(E,O), and E = g'(E,O)...

3.6.6. Being operating mode

If there are no inputs, the operations are necessarily cyclical, but the cycle may be very long, with a theoretical maximum set by the variety of S, or more exactly by the variety of its modifiable parts (that is, leaving out the basic program).
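The claim can be illustrated with a small sketch: a finite state E updated by E = f(E) with no inputs must eventually revisit a state, and from then on it cycles. With b modifiable bits there are at most 2^b states, which bounds the cycle length. The function name and the two example laws are hypothetical choices made for the illustration.

```python
from typing import Callable, Dict, Tuple


def closed_orbit(f: Callable[[int], int], e0: int) -> Tuple[int, int]:
    """Follow E = f(E) from e0 until a state repeats.

    Returns (transient, cycle): the number of steps before entering
    the cycle, and the length of the cycle itself.
    """
    first_seen: Dict[int, int] = {}
    e, t = e0, 0
    while e not in first_seen:
        first_seen[e] = t  # remember the instant of the first visit
        e = f(e)
        t += 1
    transient = first_seen[e]
    return transient, t - transient


# A 4-bit state (16 possible values) under two different laws.
full = closed_orbit(lambda e: (5 * e + 3) % 16, 0)  # visits all 16 states
short = closed_orbit(lambda e: (e * e) % 16, 2)     # falls quickly into a fixed point
```

The first law reaches the theoretical maximum for 4 bits, while the second shows that most of the available variety may never be used.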

(???) For instance, if b(E) is larger than 30, in general, the E = f(E) function will not do such a path. But there can be a lot of sub-cycles, nesting. For these sub-cycles to differ from the general one, there must exist some bits external to the cycles, for instance a counter.

Nevertheless, new things may appear in such a closed phase, if S analyses itself and discovers :

- empty, useless parts,

- better ordering methods (defrag),

- some KC as much as we have generators,

- parts.

Here, we must look for a relation between the input rate and the drill depth. There is a normal way of operation for S for a "typical" I rate. When I stops, we go to deeper and deeper tasks, until we reach the root. Thereafter, the S is bored.

What about an absolute reduction limit, and a relation between root and HC ? That could be studied on a given E, with its modifiable part. But also on a given DU with its contents.

We could also speak of some kind of "internal inputs", if the organic interior of S has connexions with the environment other than the I proper. That should be modelled cleanly, for instance with a partition of I into levels of different depths:

- operation I, day to day life

- ....

- superior I : consulting, development.

Conversely, if for a long time the inputs are very strong and never let S go down in depth, there is a sort of overworking, overheating, and S will ask for some rest or sleep (preventive maintenance), or else ends up breaking down.

But, in the internal work, going towards the root, S may find new patterns.

3.7. Some beings defined by matter/finality

A functional typology may demand organic features :

- minimal size, or maximal, sometimes strictly defined,

- maximal admissible distance for the processor (due to transmission times).

3.7.1. Message

Materially : mobile. Form : defined by format/language. Finality : action on the receiver. Efficient cause : the emitter being.

A message is in duality with beings. We could think of a "message space", with particular openings on GG. A message has a particular kind of address. It is close to a stream. It moves, and GOS or a subsystem manages the move.

3.7.2. Program

Materially : a passive being inside S, or called when demanded. Form : written. Finality : function. Efficient cause : call to the program.

"An action, the result of a control or regulation operation, is programmable if it can be determined from a set of instructions. For this, the transformation to be controlled must be determinate, and a complete model of it must have been established." Mélèse 1972.

"The object of programming is the final transcription into machine language of the combined results of functional analysis and organic analysis." (Chenique 1971)

3.7.3. Process, processor product

Materially fixed, inside a processor. Form defined by program. Finality : generally an output. Efficient cause : triggered by I or internal S cycle.

To be defined in duality with processors.

The role of time. By definition, a process is partly non-permanent, and in any case does not have the same type of stability as the processor, which supports the processes.

How is a process organized ? Organic and functional definition? In a descriptive system, as with software components, the organic side would be the interface representation; functional on the other hand would be the methods in the components? Or vice-versa ?

See services.

3.7.4. Pattern, form, force

These concepts are “open” and vague as long as they are not defined precisely in some formal context.

3.7.5. Behaviour, emotion

On the low levels, behaviour dissolves in matter. At the highest level, it is part of reason.

Architecture of behaviours : hierarchy, sequence, nesting.

A behaviour may be innate or learned. From an IOE standpoint, that does not matter very much.

Innate means : settled with the creation of the being. A type is instantiated (or a genetic combination of types) which previously had behaviours. Then, this behaviour may be kept as an internal resource (accessible by pointing to the type), or, on the contrary, the corresponding code is copied into the new being. At first, that has no importance except for performance. Later, one may imagine that the being pushes on its integration, changes this behaviour, overloads the code... and then moves further from genericity.

Other behaviours may be acquired/learned, with diverse degrees of autonomy in the acquisition:

- simple transfer (but different from innate insomuch as it is built after, and independently of, the creation of the being)

- guided learning

- autonomous creation of a new behaviour.

Example : the apes learning to wash potatoes.

Robots.

Emotion. More passive than behaviour. May be of rather low or high level. Emotion is a kind of pattern.

3.7.6. Culture

Materially : like patterns and behaviours, but larger. Form : idem. Finality : global L, plus pleasure. Efficient cause : learning.

The set of patterns owned by a being.

What can be transmitted from one being to the core of another being using only its input channels. Possibly with feedback systems (the learner sending acknowledgment signals to the teacher system).

3.8. Identity, digital from start

3.8.1. No identity without digits